TCP Performance problems caused by interaction between Nagle's Algorithm and Delayed ACK

Stuart Cheshire

20th May 2005

This page describes a TCP performance problem resulting from a little-known interaction between Nagle's Algorithm and Delayed ACK. At least, I believe it's not well known: I haven't seen it documented elsewhere, yet in the course of my career at Apple I have run into the performance problem it causes over and over again (the first time being in the PPCToolbox over TCP code I wrote myself back in 1999), so I think it's about time it was documented.

The overall summary is that many of the mechanisms that make TCP so great, like fast retransmit, work best when there's a continuous flow of data for the TCP state machine to work on. If you send a block of data and then stop and wait for an application-layer acknowledgment from the other end, the state machine can falter. It's a bit like a water pump losing its prime: as long as the whole pipeline is full of water the pump works well and the water flows, but if you have a bit of water followed by a bunch of air, the pump flails because the impeller has nothing substantial to push against.

If you're writing a request/response application-layer protocol over TCP, the implication of this is you will want to make your implementation at least double-buffered: Send your first request, and while you're still waiting for the response, generate and send your second. Then when you get the response for the first, generate and send your third request. This way you always have two requests outstanding. While you're waiting for the response for request n, request n+1 is behind it in the return pipeline, conceptually pushing the data along.
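
As a minimal sketch of that double-buffered loop, assuming the transport is hidden behind two callbacks: the names `run_pipelined`, `send_fn`, `recv_fn`, `pipeline_trace`, `demo_send` and `demo_recv` are hypothetical and exist only for illustration. A real client would wrap `write()` and `read()` on its TCP socket inside the callbacks.

```c
#include <stddef.h>

/* Hypothetical transport hooks. In a real client these would wrap
   write() and read() on a connected TCP socket. Return 0 on success. */
typedef int (*send_fn)(void *ctx, size_t request_index);
typedef int (*recv_fn)(void *ctx, size_t request_index);

/* Issue `total` requests while keeping up to `depth` of them outstanding.
   depth == 1 is stop-and-wait; depth == 2 is double-buffering. */
int run_pipelined(void *ctx, size_t total, size_t depth,
                  send_fn send_request, recv_fn recv_response)
{
    size_t sent = 0, received = 0;

    if (depth == 0)
        return -1;
    while (received < total) {
        /* Top up the pipeline before blocking on the next response. */
        while (sent < total && sent - received < depth) {
            if (send_request(ctx, sent) != 0)
                return -1;
            sent++;
        }
        if (recv_response(ctx, received) != 0)
            return -1;
        received++;
    }
    return 0;
}

/* Stand-in hooks that only count outstanding requests (illustration only). */
struct pipeline_trace { size_t outstanding; size_t max_outstanding; };

int demo_send(void *ctx, size_t request_index)
{
    struct pipeline_trace *t = ctx;
    (void)request_index;
    if (++t->outstanding > t->max_outstanding)
        t->max_outstanding = t->outstanding;
    return 0;
}

int demo_recv(void *ctx, size_t request_index)
{
    struct pipeline_trace *t = ctx;
    (void)request_index;
    t->outstanding--;
    return 0;
}
```

Calling `run_pipelined(&trace, 10, 2, demo_send, demo_recv)` with a zero-initialized `struct pipeline_trace` keeps two requests outstanding for most of the run, which is the property the text asks for.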

Interestingly, the performance benefit you get from going from single-buffering to double, triple, quadruple, etc., is not a linear slope. Implementing double-buffering usually yields almost all of the performance improvement that's there to be had; going to triple, quadruple, or n-way buffered usually yields little more. This is because what matters is not the sheer quantity of data in the pipeline, but the fact that there is something in the pipeline following the packet you're currently waiting for.

As long as there's at least a packet's worth of data following the response you're waiting for, that's enough to avoid the pathological slowdown described here. As long as there's at least four or five packets' worth of data, that's enough to trigger a fast retransmit should the packet you're waiting for be lost.

Case Study: WiFi Performance Testing Program

In this document I describe my latest encounter with this bad interaction between Nagle's Algorithm and Delayed ACK: a testing program used in WiFi conformance testing. This program tests the speed of a WiFi implementation by repeatedly sending 100,000 bytes of data over TCP and then waiting for an application-layer ack from the other end to confirm its reception. Windows achieved the 3.5Mb/s required to pass the test; Mac OS X got just 2.7Mb/s and failed. The naive (and wrong) conclusion would be that Windows is fast, and Mac OS X is slow, and that's that. The truth is not so simple. The true explanation was that the test was flawed, and Mac OS X happened to expose the problem, while Windows, basically through luck, did not.

Engineers found that reducing the buffer size from 100,000 bytes to 99,912 bytes made the measured speed jump to 5.2Mb/s, easily passing the test. At 99,913 bytes the test got 2.7Mb/s and failed. Clearly what's going on here is more interesting than just a slow wireless card and/or driver.

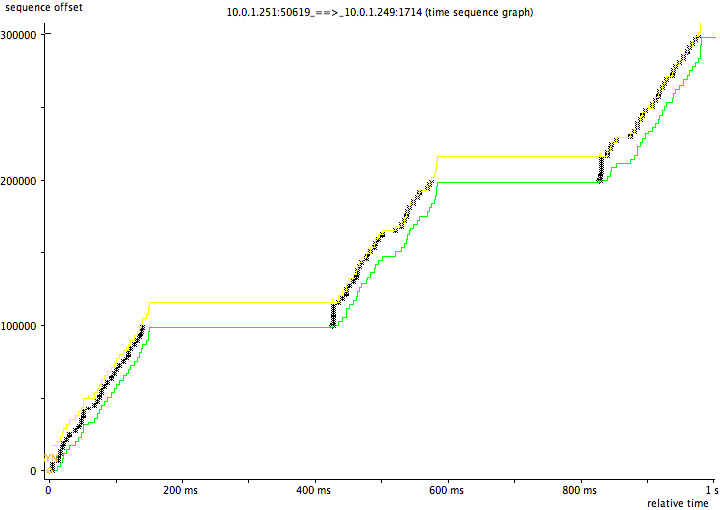

The diagram below shows a TCP packet trace of the failing transfer, which achieves only 2.7Mb/s, generated usingtcptrace and jPlot:

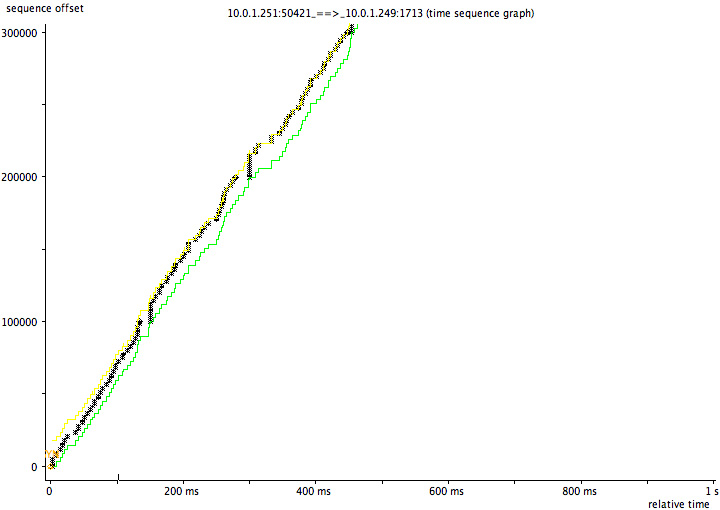

This diagram shows a TCP packet trace of the transfer using 99912-byte blocks, which achieves 5.2Mb/s and passes:

In the failing case the code is clearly sending data successfully from 0-200ms, then doing nothing from 200-400ms, then sending again from 400-600ms, then nothing again from 600-800ms. Why does it keep pausing? To understand that we need to understand Delayed ACK and Nagle's Algorithm:

Delayed ACK

Delayed ACK means TCP doesn't immediately ack every single received TCP segment. (When reading the following, think about an interactive ssh session, not bulk transfer.) If you receive a single lone TCP segment, you wait 100-200ms, on the assumption that the receiving application will probably generate a response of some kind. (E.g., every time sshd receives a keystroke, it typically generates a character echo in response.) You don't want the TCP stack to send an empty ACK followed by a TCP data packet 1ms later, every time, so you delay a little, so you can combine the ACK and data packet into one. So far so good. But what if the application doesn't generate any response data? Well, in that case, what difference can a little delay make? If there's no response data, then the client can't be waiting for anything, can it? Well, the application-layer client can't be waiting for anything, but the TCP stack at the client end can be waiting. This is where Nagle's Algorithm enters the story:

Nagle's Algorithm

For efficiency you want to send full-sized TCP data packets. Nagle's Algorithm says that if you have a few bytes to send, but not a full packet's worth, and you already have some unacknowledged data in flight, then you wait, until either the application gives you more data, enough to make another full-sized TCP data packet, or the other end acknowledges all your outstanding data, so you no longer have any data in flight.
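
The rule just stated can be written as a single predicate. This is a simplified model for illustration, not the code of any real TCP stack; the function name `nagle_may_send` and its parameters are assumptions introduced here.

```c
#include <stdbool.h>
#include <stddef.h>

/* Simplified model of the rule above, not a real TCP stack's code.
   queued_bytes:  data the application has written but TCP has not sent
   mss:           payload size of a full-sized TCP segment
   unacked_bytes: data already sent but not yet acknowledged */
bool nagle_may_send(size_t queued_bytes, size_t mss, size_t unacked_bytes)
{
    if (queued_bytes == 0)
        return false;            /* nothing to send */
    if (queued_bytes >= mss)
        return true;             /* a full-sized packet can always go */
    return unacked_bytes == 0;   /* a short packet goes only when nothing is in flight */
}
```

With a 1448-byte segment size, `nagle_may_send(88, 1448, 1448)` is false (88 bytes wait behind an unacknowledged packet), while `nagle_may_send(88, 1448, 0)` is true.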

Usually this is a good idea. Nagle's Algorithm is to protect the network from stupid apps that do things like this, where a naive TCP stack might end up sending 100,000 one-byte packets.

```c
for (i = 0; i < 100000; i++)
    write(socket, &buffer[i], 1);   /* one byte per write() call */
```

The bad interaction is that now there is something at the sending end waiting for that response from the server. That something is Nagle's Algorithm, waiting for its in-flight data to be acknowledged before sending more.

The next thing to know is that Delayed ACK applies to a single packet. If a second packet arrives, an ACK is generated immediately. So TCP will ACK every second packet immediately. Send two packets, and you get an immediate ACK. Send three packets, and you'll get an immediate ACK covering the first two, then a 200ms pause before the ACK for the third.
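
The every-second-packet behavior can be modeled in a few lines. This sketch assumes a burst of full-sized packets arriving back to back, with no response data from the receiving application and no timer expiry in between; `ack_plan` and `plan_acks` are hypothetical names.

```c
#include <stdbool.h>
#include <stddef.h>

/* What the receiver does with a back-to-back burst of `packets` segments,
   under the simplifying assumptions stated in the text above. */
struct ack_plan {
    size_t immediate_acks;      /* one ACK per pair of packets */
    bool   last_packet_delayed; /* a lone trailing packet waits for the timer */
};

struct ack_plan plan_acks(size_t packets)
{
    struct ack_plan plan;
    plan.immediate_acks = packets / 2;
    plan.last_packet_delayed = (packets % 2) != 0;
    return plan;
}
```

For two packets this gives one immediate ACK and nothing delayed; for three packets it gives one immediate ACK and a delayed ACK for the third.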

Armed with this information, we can now understand what's going on. Let's look at the numbers:

99,900 bytes = 68 full-sized 1448-byte packets, plus 1436 bytes extra

100,000 bytes = 69 full-sized 1448-byte packets, plus 88 bytes extra
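
These figures are plain integer division by the segment size, which a short helper can reproduce. The names `packet_split` and `split_block` are hypothetical.

```c
#include <stddef.h>

/* How a block of application data divides into TCP segments. */
struct packet_split {
    size_t full;   /* number of full-sized packets */
    size_t extra;  /* bytes left over for a final short packet */
};

struct packet_split split_block(size_t block_bytes, size_t mss)
{
    struct packet_split s = {0, 0};
    if (mss == 0)
        return s;               /* avoid dividing by zero */
    s.full = block_bytes / mss;
    s.extra = block_bytes % mss;
    return s;
}
```

`split_block(99900, 1448)` gives 68 full packets plus 1436 bytes, and `split_block(100000, 1448)` gives 69 full packets plus 88 bytes.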

With 99,900 bytes, you send 68 full-sized packets. Nagle holds onto the last 1436 bytes. Then:

- Your 68th packet (an even number) is immediately acknowledged by the receiver without delay

- Nagle gets the ACK and releases the remaining 1436 bytes

- The receiver gets the remaining 1436 bytes, and delays its ACK

- The server application generates its one-byte application-layer ack for the transfer

- Delayed ACK combines that one byte with its pending ACK packet and sends the combined TCP ACK+data packet promptly

...and everything flows (relatively) smoothly.

Now consider the 100,000-byte case. You send a stream of 69 full-sized packets. Nagle holds onto the last 88 bytes. Then:

- The receiver ACKs every second packet, up to packet 68.

- One more data packet arrives, packet 69.

- Delayed ACK means that the receiver won't ACK this packet until it gets

(a) some response data from the local process, or

(b) another packet from the sender.

- The local process won't generate any response data (a) because it hasn't got the full 100,000 bytes yet.

- The sender won't send the last packet (b) because Nagle won't let it until it gets an ACK from the receiver.

Now we have a brief deadlock, with performance-killing results:

- Nagle won't send the last bit of data until it gets an ACK

- Delayed ACK won't send that ACK until it gets some response data

- Server process won't generate any response until it gets all the data

- but, Nagle won't send the last bit of data... and so on.
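
Putting the two rules together gives a rough predictor for when a stop-and-wait block transfer hits this deadlock. It is only a sketch under stated assumptions: the connection is idle at the start of each block, the receiver ACKs every second full-sized packet, and the sender has Nagle's Algorithm enabled. The name `block_stalls` is hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>

/* Predict whether sending one block and then waiting for an
   application-layer reply runs into the Nagle / Delayed ACK stall. */
bool block_stalls(size_t block_bytes, size_t mss)
{
    size_t full, extra;

    if (mss == 0)
        return false;
    full = block_bytes / mss;
    extra = block_bytes % mss;

    /* An odd number of full packets leaves the last one unacknowledged
       (Delayed ACK), and a non-empty short tail is then held back by
       Nagle until that ACK arrives. */
    return (full % 2 == 1) && (extra > 0);
}
```

Under these assumptions the model agrees with the measurements reported earlier: with 1448-byte segments it predicts a stall for 100,000 and 99,913 bytes but not for 99,912 or 99,900 bytes, and with 1460-byte segments it predicts no stall for 100,000 bytes.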

So, at the end of each 100,000-byte transfer we get this little awkward pause. Finally the delayed ack timer goes off and the deadlock un-wedges, until next time. On a gigabit network, all these huge 200ms pauses can be devastating to an application protocol that runs into this problem. These pauses can limit a request/response application-layer protocol to at most five transactions per second, on a network link where it should be capable of a thousand transactions per second or more. In the case of this specific attempt at a performance test, it should have been able to, in principle, transfer each 100,000-byte chunk over a local gigabit Ethernet link in as little as 1ms. Instead, because it stops and waits after each chunk, instead of double-buffering as recommended above, sending each 100,000-byte chunk takes 1ms + 200ms pause = 201ms, making the test run roughly two hundred times slower than it should.
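
The arithmetic in this paragraph can be checked with two small helpers. Both function names are hypothetical, and the 1ms and 200ms figures are the ones used in the text.

```c
/* Upper bound on transactions per second when every transaction
   ends with one stall of `stall_ms` milliseconds. */
unsigned max_transactions_per_second(unsigned stall_ms)
{
    return stall_ms ? 1000u / stall_ms : 0u;
}

/* How many times longer a chunk takes once the stall is added. */
unsigned slowdown_factor(unsigned transfer_ms, unsigned stall_ms)
{
    return transfer_ms ? (transfer_ms + stall_ms) / transfer_ms : 0u;
}
```

With a 200ms stall, `max_transactions_per_second(200)` is 5, and `slowdown_factor(1, 200)` is 201, matching "roughly two hundred times slower".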

Why didn't Windows suffer this problem?

On Windows the TCP segment size is 1460 bytes. On Mac OS X and other operating systems that add a TCP time-stamp option, the TCP segment size is twelve bytes smaller: 1448 bytes.

What this means is that on Windows, 100,000 bytes is 68 full-sized 1460-byte packets plus 720 extra bytes. Because 68 is an even number, by pure luck the application avoids the Nagle/Delayed ACK interaction.

On Mac OS X, 100,000 bytes is 69 full-sized 1448-byte packets, plus 88 bytes extra. Because 69 is an odd number, Mac OS X exposes the application problem.

A crude way to solve the problem, though still not as efficient as the double-buffering approach, is to make sure the application sends each semantic message using a single large write (either by copying the message data into a contiguous buffer, or by using a scatter/gather-type write operation like sendmsg) and set the TCP_NODELAY socket option, thereby disabling Nagle's algorithm. This avoids this particular problem, though it can still suffer other problems inherent in not using double buffering. For example, if the last packet of a response gets lost, there are no packets following it to trigger a fast retransmit.
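
A minimal sketch of that workaround on a POSIX system follows. It uses `writev` as the scatter/gather write (the text mentions `sendmsg`, which would work similarly); the helper names `disable_nagle` and `send_message` and the header/body split are assumptions made for illustration.

```c
#include <netinet/in.h>
#include <netinet/tcp.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <sys/uio.h>

/* Turn off Nagle's Algorithm on a TCP socket. Returns 0 on success. */
int disable_nagle(int fd)
{
    int on = 1;
    return setsockopt(fd, IPPROTO_TCP, TCP_NODELAY, &on, sizeof on);
}

/* Hand a two-part message to the kernel in one call, so the stack sees
   the whole semantic message at once. Returns the number of bytes
   accepted, or -1 on error. A short count is possible, so a real
   caller would loop until everything has been written. */
ssize_t send_message(int fd, const void *header, size_t header_len,
                     const void *body, size_t body_len)
{
    struct iovec iov[2];

    iov[0].iov_base = (void *)header;  /* writev only reads from the buffers */
    iov[0].iov_len  = header_len;
    iov[1].iov_base = (void *)body;
    iov[1].iov_len  = body_len;
    return writev(fd, iov, 2);
}
```

A sender would call `disable_nagle(fd)` once after connecting and then use `send_message` for each message, instead of issuing separate small writes for the header and the body.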
