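The whole point of implementing the lwIP protocol stack is to let upper layers build applications with socket programming. So let's start with sockets. For compatibility, lwIP's socket layer should provide the standard socket interface functions, and indeed it does: src/include/lwip/sockets.h contains the following macro definitions: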

```c
#if LWIP_COMPAT_SOCKETS
#define accept(a,b,c)         lwip_accept(a,b,c)
#define bind(a,b,c)           lwip_bind(a,b,c)
#define shutdown(a,b)         lwip_shutdown(a,b)
#define closesocket(s)        lwip_close(s)
#define connect(a,b,c)        lwip_connect(a,b,c)
#define getsockname(a,b,c)    lwip_getsockname(a,b,c)
#define getpeername(a,b,c)    lwip_getpeername(a,b,c)
#define setsockopt(a,b,c,d,e) lwip_setsockopt(a,b,c,d,e)
#define getsockopt(a,b,c,d,e) lwip_getsockopt(a,b,c,d,e)
#define listen(a,b)           lwip_listen(a,b)
#define recv(a,b,c,d)         lwip_recv(a,b,c,d)
#define recvfrom(a,b,c,d,e,f) lwip_recvfrom(a,b,c,d,e,f)
#define send(a,b,c,d)         lwip_send(a,b,c,d)
#define sendto(a,b,c,d,e,f)   lwip_sendto(a,b,c,d,e,f)
#define socket(a,b,c)         lwip_socket(a,b,c)
#define select(a,b,c,d,e)     lwip_select(a,b,c,d,e)
#define ioctlsocket(a,b,c)    lwip_ioctl(a,b,c)

#if LWIP_POSIX_SOCKETS_IO_NAMES
#define read(a,b,c)           lwip_read(a,b,c)
#define write(a,b,c)          lwip_write(a,b,c)
#define close(s)              lwip_close(s)
#endif /* LWIP_POSIX_SOCKETS_IO_NAMES */

#endif /* LWIP_COMPAT_SOCKETS */
```

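Leaving the actual implementation functions aside for now, these macros alone already cover the interface that a standard socket API must provide.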
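To make the renaming concrete, here is a minimal, self-contained sketch. The two lwip_* functions are hypothetical stubs that only record which one ran (the real ones live in src/api/sockets.c); the macros have the same shape as the ones above, so application code written against socket()/closesocket() is turned into lwip_* calls by the preprocessor.

```c
#include <assert.h>

/* Hypothetical stubs standing in for the real lwip_* functions;
   they only record which one was reached. */
static int last_called;   /* 0 = none, 1 = lwip_socket, 2 = lwip_close */

static int lwip_socket(int domain, int type, int protocol)
{
    (void)domain; (void)type; (void)protocol;
    last_called = 1;
    return 0;             /* pretend table slot 0 was allocated */
}

static int lwip_close(int s)
{
    (void)s;
    last_called = 2;
    return 0;
}

/* Same style of renaming macros as lwip/sockets.h. */
#define socket(a,b,c)  lwip_socket(a,b,c)
#define closesocket(s) lwip_close(s)

/* Application code written against the standard names. */
static int open_then_close(void)
{
    int s = socket(2, 1, 0);   /* 2 and 1 stand in for AF_INET and SOCK_STREAM */
    if (s < 0)
        return -1;
    return closesocket(s);     /* expands to lwip_close(s) */
}
```

Because these are plain function-like macros, they rewrite every matching call in any file that includes the header. That is why read/write/close sit behind the separate LWIP_POSIX_SOCKETS_IO_NAMES switch: with it enabled, a close() meant for an ordinary file would also be turned into lwip_close().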

Next, the actual implementations, which live in src/api/sockets.c. First, the function that accepts connections, which applies only to TCP.

Prototype: `int lwip_accept(int s, struct sockaddr *addr, socklen_t *addrlen)`

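Note that the socket parameter s is simply an int: an index into lwIP's internal socket table rather than a pointer to a socket object.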

The first call in this function is `sock = get_socket(s);`.

Here sock is a pointer to struct lwip_socket, which is also defined in src/api/sockets.c:

```c
/** Contains all internal pointers and states used for a socket */
struct lwip_socket {
  /** sockets currently are built on netconns, each socket has one netconn */
  struct netconn *conn;
  /** data that was left from the previous read */
  struct netbuf *lastdata;
  /** offset in the data that was left from the previous read */
  u16_t lastoffset;
  /** number of times data was received, set by event_callback(),
      tested by the receive and select functions */
  u16_t rcvevent;
  /** number of times data was ACKed (free send buffer), set by event_callback(),
      tested by select */
  u16_t sendevent;
  /** socket flags (currently, only used for O_NONBLOCK) */
  u16_t flags;
  /** last error that occurred on this socket */
  int err;
};
```

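Let's leave this structure for now and look at get_socket, also in src/api/sockets.c. There we find the statement `sock = &sockets[s];`, and sock is clearly what the function returns: it uses the index passed in to locate the corresponding element of the sockets array and returns that element's address. So where do the elements of the sockets array get filled in?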
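The lookup can be sketched as follows. This is an illustration rather than lwIP's code: NUM_SOCKETS is set to 4 here (assumed to come from a configuration option such as MEMP_NUM_NETCONN in a real build), struct netconn is a placeholder, and the range and in-use checks are the ones any such lookup needs before trusting &sockets[s].

```c
#include <assert.h>
#include <stddef.h>

#define NUM_SOCKETS 4  /* assumption: a real build derives this from lwipopts.h */

struct netconn { int id; };                   /* placeholder for the real netconn */
struct lwip_socket { struct netconn *conn; }; /* other fields omitted */

static struct lwip_socket sockets[NUM_SOCKETS];

/* Map a descriptor (an index) back to its table entry, rejecting
   out-of-range indices and slots that are not in use. */
static struct lwip_socket *get_socket_sketch(int s)
{
    if (s < 0 || s >= NUM_SOCKETS)
        return NULL;             /* not a valid descriptor */
    struct lwip_socket *sock = &sockets[s];
    if (sock->conn == NULL)
        return NULL;             /* slot exists but holds no netconn */
    return sock;
}
```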

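To answer that, we should really start where standard socket programming starts, with the socket() call, so let's take a look. Its actual implementation is this function: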

`int lwip_socket(int domain, int type, int protocol)` (src/api/sockets.c)

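Depending on the protocol type, that is, the type argument, this function creates a netconn and obtains a pointer to it, then calls alloc_socket with that pointer as its argument. Here is alloc_socket: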

```c
static int alloc_socket(struct netconn *newconn)
{
  int i;

  /* Protect socket array */
  sys_sem_wait(socksem);

  /* allocate a new socket identifier */
  for (i = 0; i < NUM_SOCKETS; ++i) {
    if (!sockets[i].conn) {
      sockets[i].conn       = newconn;
      sockets[i].lastdata   = NULL;
      sockets[i].lastoffset = 0;
      sockets[i].rcvevent   = 0;
      sockets[i].sendevent  = 1; /* TCP send buf is empty */
      sockets[i].flags      = 0;
      sockets[i].err        = 0;
      sys_sem_signal(socksem);
      return i;
    }
  }
  sys_sem_signal(socksem);
  return -1;
}
```

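There it is: this is where elements of the global sockets array are filled in. The index i of the first free slot is returned, and lwip_socket hands it back to the application as the socket descriptor.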
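Since the descriptor is nothing more than a table index, a slot can be reused as soon as it is freed. The sketch below reproduces alloc_socket's first-free-slot scan in a single-threaded setting (so the socksem lock is dropped) and adds a hypothetical free_slot() that clears conn, which is roughly what closing a socket does to its table entry. The table size and the trimmed-down structures are assumptions for illustration.

```c
#include <assert.h>
#include <stddef.h>

#define NUM_SOCKETS 4   /* assumption: a small table for illustration */

struct netconn { int id; };
struct lwip_socket {
    struct netconn *conn;
    unsigned short rcvevent, sendevent;
};

static struct lwip_socket sockets[NUM_SOCKETS];

/* First-free-slot scan in the style of alloc_socket(). The socksem
   protection is omitted because this sketch is single-threaded. */
static int alloc_slot(struct netconn *newconn)
{
    for (int i = 0; i < NUM_SOCKETS; ++i) {
        if (sockets[i].conn == NULL) {
            sockets[i].conn = newconn;
            sockets[i].rcvevent = 0;
            sockets[i].sendevent = 1;  /* send buffer starts out empty */
            return i;                  /* the descriptor is simply the index */
        }
    }
    return -1;                         /* table full */
}

/* Hypothetical release: clearing conn marks the slot free again. */
static void free_slot(int s)
{
    sockets[s].conn = NULL;
}
```

After descriptors 0 and 1 are handed out and 0 is freed, the next allocation returns 0 again, because the scan always starts from the lowest index.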

Since we are here anyway, let's also look at the netconn structure, formally called the netconn descriptor:

```c
/** A netconn descriptor */
struct netconn {
  /** type of the netconn (TCP, UDP or RAW) */
  enum netconn_type type;
  /** current state of the netconn */
  enum netconn_state state;
  /** the lwIP internal protocol control block */
  union {
    struct ip_pcb  *ip;
    struct tcp_pcb *tcp;
    struct udp_pcb *udp;
    struct raw_pcb *raw;
  } pcb;
  /** the last error this netconn had */
  err_t err;
  /** sem that is used to synchronously execute functions in the core context */
  sys_sem_t op_completed;
  /** mbox where received packets are stored until they are fetched
      by the netconn application thread (can grow quite big) */
  sys_mbox_t recvmbox;
  /** mbox where new connections are stored until processed
      by the application thread */
  sys_mbox_t acceptmbox;
  /** only used for socket layer */
  int socket;
#if LWIP_SO_RCVTIMEO
  /** timeout to wait for new data to be received
      (or connections to arrive for listening netconns) */
  int recv_timeout;
#endif /* LWIP_SO_RCVTIMEO */
#if LWIP_SO_RCVBUF
  /** maximum amount of bytes queued in recvmbox */
  int recv_bufsize;
#endif /* LWIP_SO_RCVBUF */
  u16_t recv_avail;
  /** TCP: when data passed to netconn_write doesn't fit into the send buffer,
      this temporarily stores the message. */
  struct api_msg_msg *write_msg;
  /** TCP: when data passed to netconn_write doesn't fit into the send buffer,
      this temporarily stores how much is already sent. */
  int write_offset;
#if LWIP_TCPIP_CORE_LOCKING
  /** TCP: when data passed to netconn_write doesn't fit into the send buffer,
      this temporarily stores whether to wake up the original application task
      if data couldn't be sent in the first try. */
  u8_t write_delayed;
#endif /* LWIP_TCPIP_CORE_LOCKING */
  /** A callback function that is informed about events for this netconn */
  netconn_callback callback;
};
```

(src/include/lwip/api.h)

That gives us a rough picture of what this structure holds.

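Next, taking SOCK_STREAM as the example, let's follow how a netconn is created. lwip_socket switches on type; its SOCK_DGRAM and SOCK_STREAM branches read:

```c
case SOCK_DGRAM:
  conn = netconn_new_with_callback( (protocol == IPPROTO_UDPLITE) ?
                                    NETCONN_UDPLITE : NETCONN_UDP, event_callback);
  /* ... */
  break;
case SOCK_STREAM:
  conn = netconn_new_with_callback(NETCONN_TCP, event_callback);
  /* ... */
  break;
```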

netconn_new_with_callback is a macro defined in src/include/lwip/api.h:

```c
#define netconn_new_with_callback(t, c) netconn_new_with_proto_and_callback(t, 0, c)
```

A simplified version of netconn_new_with_proto_and_callback (src/api/api_lib.c) looks like this:

```c
struct netconn *
netconn_new_with_proto_and_callback(enum netconn_type t, u8_t proto, netconn_callback callback)
{
  struct netconn *conn;
  struct api_msg msg;

  conn = netconn_alloc(t, callback);
  if (conn != NULL) {
    msg.function = do_newconn;
    msg.msg.msg.n.proto = proto;
    msg.msg.conn = conn;
    TCPIP_APIMSG(&msg);
  }
  return conn;
}
```

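The interesting part is TCPIP_APIMSG. This macro has two definitions: when LWIP_TCPIP_CORE_LOCKING is enabled it expands to tcpip_apimsg_lock(), otherwise to tcpip_apimsg(). Let's look at these two functions in turn.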

```c
/**
 * Call the lower part of a netconn_* function
 * This function has exclusive access to lwIP core code by locking it
 * before the function is called.
 */
err_t tcpip_apimsg_lock(struct api_msg *apimsg)  /* the LWIP_TCPIP_CORE_LOCKING variant */
{
  LOCK_TCPIP_CORE();
  apimsg->function(&(apimsg->msg));
  UNLOCK_TCPIP_CORE();
  return ERR_OK;
}
```

```c
/**
 * Call the lower part of a netconn_* function
 * This function is then running in the thread context
 * of tcpip_thread and has exclusive access to lwIP core code.
 */
err_t tcpip_apimsg(struct api_msg *apimsg)  /* the non-locking variant */
{
  struct tcpip_msg msg;

  if (mbox != SYS_MBOX_NULL) {
    msg.type = TCPIP_MSG_API;
    msg.msg.apimsg = apimsg;
    sys_mbox_post(mbox, &msg);
    sys_arch_sem_wait(apimsg->msg.conn->op_completed, 0);
    return ERR_OK;
  }
  return ERR_VAL;
}
```
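The non-locking path is a post-and-wait handshake between an application thread and the single core thread. The sketch below models it with POSIX threads: a one-slot mailbox stands in for tcpip_thread's mbox, and a flag guarded by a mutex and condition variable stands in for the op_completed semaphore. The struct layouts and double_arg() are hypothetical; only the control flow is meant to mirror tcpip_apimsg() and TCPIP_APIMSG_ACK().

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

/* Hypothetical, cut-down versions of lwIP's api_msg types. */
struct api_msg_msg { int arg; int result; };
struct api_msg {
    void (*function)(struct api_msg_msg *msg);
    struct api_msg_msg msg;
};

static struct api_msg *mbox_slot;   /* one-slot stand-in for tcpip_thread's mbox */
static int op_done;                 /* stand-in for conn->op_completed */
static pthread_mutex_t lock = PTHREAD_MUTEX_INITIALIZER;
static pthread_cond_t cond = PTHREAD_COND_INITIALIZER;

/* Core side: take one message, run its function, then signal completion. */
static void *core_thread(void *unused)
{
    (void)unused;
    pthread_mutex_lock(&lock);
    while (mbox_slot == NULL)
        pthread_cond_wait(&cond, &lock);
    struct api_msg *m = mbox_slot;
    mbox_slot = NULL;
    pthread_mutex_unlock(&lock);

    m->function(&m->msg);           /* runs in the core thread, like do_newconn */

    pthread_mutex_lock(&lock);
    op_done = 1;                    /* plays the role of TCPIP_APIMSG_ACK() */
    pthread_cond_broadcast(&cond);
    pthread_mutex_unlock(&lock);
    return NULL;
}

/* Application side: post the message, then block until the core acks it. */
static void post_and_wait(struct api_msg *m)
{
    pthread_mutex_lock(&lock);
    op_done = 0;
    mbox_slot = m;                  /* like sys_mbox_post(mbox, &msg) */
    pthread_cond_broadcast(&cond);
    while (!op_done)                /* like sys_arch_sem_wait(op_completed, 0) */
        pthread_cond_wait(&cond, &lock);
    pthread_mutex_unlock(&lock);
}

static void double_arg(struct api_msg_msg *msg) { msg->result = msg->arg * 2; }

/* Start a core thread, push one request through it, return the result. */
static int run_one_request(int arg)
{
    pthread_t core;
    struct api_msg m = { double_arg, { arg, 0 } };
    if (pthread_create(&core, NULL, core_thread, NULL) != 0)
        return -1;
    post_and_wait(&m);   /* m lives on this stack; safe only because we block */
    pthread_join(core, NULL);
    return m.msg.result;
}
```

Blocking until the acknowledgement arrives is not optional here: in netconn_new_with_proto_and_callback the struct api_msg is a local variable, so the caller's stack frame must stay alive until the core thread is done with it. The locking variant skips the thread hop entirely and runs the function in the caller's own thread while holding the core lock.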

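In fact both do the same job, calling apimsg->function; they just get there by different routes. The locking version takes the core lock and calls the function directly in the caller's thread. The non-locking version posts the message to tcpip_thread's mailbox and then blocks on the connection's op_completed semaphore until tcpip_thread has run the function and signalled completion. Their header comments say as much. So the call to apimsg->function is what matters, and from the implementation of netconn_new_with_proto_and_callback we know that here this function is do_newconn: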

```c
void do_newconn(struct api_msg_msg *msg)
{
  if (msg->conn->pcb.tcp == NULL) {
    pcb_new(msg);
  }
  /* Else? This "new" connection already has a PCB allocated. */
  /* Is this an error condition? Should it be deleted? */
  /* We currently just are happy and return. */
  TCPIP_APIMSG_ACK(msg);
}
```

Sticking with TCP, pcb_new contains the following code:

```c
case NETCONN_TCP:
  msg->conn->pcb.tcp = tcp_new();
  if (msg->conn->pcb.tcp == NULL) {
    msg->conn->err = ERR_MEM;
    break;
  }
  setup_tcp(msg->conn);
  break;
```

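So this is where the TCP protocol control block (PCB) is created by tcp_new(), after which setup_tcp() registers the netconn's callbacks on it. No connection is established at this point yet. tcp_new() itself, a very capable function, will be covered another time.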
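The create-once logic of do_newconn and the type dispatch in pcb_new can be modelled in a few lines. In this sketch fake_tcp_new()/fake_udp_new() are hypothetical stand-ins that just allocate zeroed memory, and the err_t values are assumed. It also shows why do_newconn may test pcb.tcp even for a UDP netconn: every member of the pcb union is a pointer to a structure, and C requires all such pointers to share one representation, so they overlay the same storage.

```c
#include <assert.h>
#include <stdlib.h>

typedef signed char err_t;   /* assumed: a signed 8-bit error type */
#define ERR_OK   0
#define ERR_MEM -1

struct tcp_pcb { int state; };
struct udp_pcb { int local_port; };
enum netconn_type { NETCONN_TCP, NETCONN_UDP };

struct netconn {
    enum netconn_type type;
    union {                  /* only the member matching type is meaningful */
        struct tcp_pcb *tcp;
        struct udp_pcb *udp;
    } pcb;
    err_t err;
};

/* Hypothetical stand-ins for tcp_new()/udp_new(): zeroed heap blocks. */
static struct tcp_pcb *fake_tcp_new(void) { return calloc(1, sizeof(struct tcp_pcb)); }
static struct udp_pcb *fake_udp_new(void) { return calloc(1, sizeof(struct udp_pcb)); }

/* Dispatch on the netconn type, recording ERR_MEM if allocation fails. */
static void pcb_new_sketch(struct netconn *conn)
{
    switch (conn->type) {
    case NETCONN_TCP:
        conn->pcb.tcp = fake_tcp_new();
        if (conn->pcb.tcp == NULL)
            conn->err = ERR_MEM;
        break;
    case NETCONN_UDP:
        conn->pcb.udp = fake_udp_new();
        if (conn->pcb.udp == NULL)
            conn->err = ERR_MEM;
        break;
    }
}

/* Create a PCB only if none exists yet; testing pcb.tcp detects
   "no PCB yet" whatever the connection type. */
static void do_newconn_sketch(struct netconn *conn)
{
    if (conn->pcb.tcp == NULL)
        pcb_new_sketch(conn);
    /* the real do_newconn() then calls TCPIP_APIMSG_ACK() to wake the caller */
}
```

Calling do_newconn_sketch() a second time on the same netconn leaves the existing PCB in place, matching the "we currently just are happy and return" comment in do_newconn.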

All right, let's get back to the accept function.

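With sock obtained, the next step is `newconn = netconn_accept(sock->conn);`, which takes a new connection out of a mailbox (the netconn's acceptmbox). A quick guess says this new connection must have something to do with listen, so let's take another detour and look at the listen operation.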

The call chain is lwip_listen → netconn_listen_with_backlog → do_listen, and do_listen contains:

```c
tcp_arg(msg->conn->pcb.tcp, msg->conn);
tcp_accept(msg->conn->pcb.tcp, accept_function);  /* register the accept callback */
```
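tcp_arg and tcp_accept store a context pointer and a function pointer in the listening PCB; later, when a handshake on that PCB completes, the stack calls the stored function with the stored argument, which is how accept_function receives the listening netconn as arg. The sketch below shows only that store-then-call-back pattern; the *_sketch functions, the cut-down struct tcp_pcb and deliver_new_connection() are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

typedef signed char err_t;
struct tcp_pcb;

/* Same shape as the accept callback that tcp_accept() registers. */
typedef err_t (*accept_fn)(void *arg, struct tcp_pcb *newpcb, err_t err);

/* Hypothetical, stripped-down PCB holding only what tcp_arg()/tcp_accept() set. */
struct tcp_pcb {
    void *callback_arg;
    accept_fn accept;
};

static void tcp_arg_sketch(struct tcp_pcb *pcb, void *arg) { pcb->callback_arg = arg; }
static void tcp_accept_sketch(struct tcp_pcb *pcb, accept_fn fn) { pcb->accept = fn; }

/* What the stack does once a handshake on a listening PCB completes:
   hand the new PCB to the registered callback along with the stored arg. */
static err_t deliver_new_connection(struct tcp_pcb *listener, struct tcp_pcb *newpcb)
{
    if (listener->accept == NULL)
        return -1;                      /* no accept callback registered */
    return listener->accept(listener->callback_arg, newpcb, 0);
}

/* Example callback: arg is a counter here, where accept_function()
   would receive the listening netconn instead. */
static err_t count_accepts(void *arg, struct tcp_pcb *newpcb, err_t err)
{
    (void)newpcb; (void)err;
    int *counter = arg;
    ++*counter;
    return 0;
}
```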

```c
/**
 * Accept callback function for TCP netconns.
 * Allocates a new netconn and posts that to conn->acceptmbox.
 */
static err_t accept_function(void *arg, struct tcp_pcb *newpcb, err_t err)
{
  struct netconn *newconn;
  struct netconn *conn;

  conn = (struct netconn *)arg;

  /* We have to set the callback here even though
   * the new socket is unknown. conn->socket is marked as -1. */
  newconn = netconn_alloc(conn->type, conn->callback);
  if (newconn == NULL) {
    return ERR_MEM;
  }
  newconn->pcb.tcp = newpcb;
  setup_tcp(newconn);
  newconn->err = err;

  /* Register event with callback */
  API_EVENT(conn, NETCONN_EVT_RCVPLUS, 0);

  if (sys_mbox_trypost(conn->acceptmbox, newconn) != ERR_OK) {
    /* When returning != ERR_OK, the connection is aborted in tcp_process(),
       so do nothing here! */
    newconn->pcb.tcp = NULL;
    netconn_free(newconn);
    return ERR_MEM;
  }
  return ERR_OK;
}
```

So the connection that accept fetches from the mailbox is exactly the one posted here.
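accept_function and netconn_accept form a producer/consumer pair around acceptmbox. The producer uses sys_mbox_trypost, which fails instead of blocking when the mailbox is full; in that case the new netconn is freed and returning ERR_MEM makes tcp_process() abort the connection. The ring buffer below is a hypothetical single-threaded model of that behaviour: MBOX_SIZE = 2 is an arbitrary assumption, and unlike the real netconn_accept, mbox_fetch() returns NULL instead of blocking when the mailbox is empty.

```c
#include <assert.h>
#include <stddef.h>

#define MBOX_SIZE 2   /* assumption: arbitrary capacity for illustration */

struct netconn { int id; };

/* Minimal fixed-size mailbox (ring buffer) of netconn pointers. */
struct mbox {
    struct netconn *slots[MBOX_SIZE];
    int head;    /* index of the oldest entry */
    int count;   /* number of queued entries */
};

/* Non-blocking post in the spirit of sys_mbox_trypost(): fails when full. */
static int mbox_trypost(struct mbox *mb, struct netconn *c)
{
    if (mb->count == MBOX_SIZE)
        return -1;                       /* full: producer must drop the conn */
    mb->slots[(mb->head + mb->count) % MBOX_SIZE] = c;
    mb->count++;
    return 0;
}

/* Take the oldest entry (FIFO order), or NULL if nothing is queued. */
static struct netconn *mbox_fetch(struct mbox *mb)
{
    if (mb->count == 0)
        return NULL;
    struct netconn *c = mb->slots[mb->head];
    mb->head = (mb->head + 1) % MBOX_SIZE;
    mb->count--;
    return c;
}
```

Connections come out in the order they were accepted by the stack, and once the mailbox is full any further connection is refused at the TCP level rather than queued.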

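Back in accept: having obtained the new connection, it allocates a socket for it with alloc_socket, just as lwip_socket does. And after that? After that, it simply waits for the user to receive and send data.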

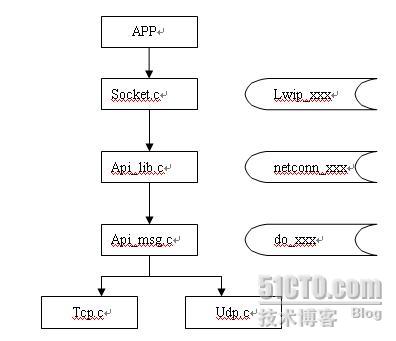

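That completes the walk-through of how lwIP wraps sockets for the application layer, that is, everything above the transport layer. Finally, let's sum up the overall call path.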

This article is from the “bluefish” blog; please retain this attribution: http://bluefish.blog.51cto.com/214870/158413