from: http://en.wikipedia.org/wiki/Binary_search_tree

http://zh.wikipedia.org/wiki/二元搜索树

Binary search tree

Type: Tree

Time complexity in big O notation:

| Operation | Average | Worst case |
|---|---|---|
| Space | O(n) | O(n) |
| Search | O(log n) | O(n) |
| Insert | O(log n) | O(n) |
| Delete | O(log n) | O(n) |

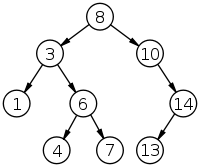

In computer science, a binary search tree (BST), sometimes also called an ordered or sorted binary tree, is a node-based binary tree data structure that has the following properties:[1]

- The left subtree of a node contains only nodes with keys less than the node's key.
- The right subtree of a node contains only nodes with keys greater than the node's key.
- Both the left and right subtrees must also be binary search trees.
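These properties constrain entire subtrees, not just a node's immediate children. A tree in which each node is correctly ordered against its own children can still violate them, so a checker has to pass key bounds down the tree.

The following is a minimal Python sketch. It assumes a hypothetical `Node` class with `key`, `left` and `right` fields, and distinct keys.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class Node:
    """Hypothetical node type: a key plus optional left/right children."""
    key: Any
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def is_bst(node: Optional[Node], low: Any = None, high: Any = None) -> bool:
    """Return True if every key in the subtree lies strictly between low and high.

    None for low/high means "unbounded". Passing the bounds down checks the
    whole-subtree property, not just each node against its direct children.
    """
    if node is None:
        return True  # an empty subtree is trivially a BST
    if low is not None and not low < node.key:
        return False
    if high is not None and not node.key < high:
        return False
    # Keys on the left must stay below node.key; keys on the right must stay above it.
    return is_bst(node.left, low, node.key) and is_bst(node.right, node.key, high)


good = Node(5, Node(3, Node(1), Node(4)), Node(8))
bad = Node(5, Node(3, Node(1), Node(6)), Node(8))  # 6 sits in 5's left subtree
print(is_bst(good), is_bst(bad))  # True False
```

Note that `bad` passes a purely local check: 6 is correctly the right child of 3. It fails only because 6 also lies in the left subtree of 5.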

Generally, the information represented by each node is a record rather than a single data element. However, for sequencing purposes, nodes are compared according to their keys rather than any part of their associated records.

The major advantage of binary search trees over other data structures is that the related sorting algorithms and search algorithms, such as in-order traversal, can be very efficient.

Binary search trees are a fundamental data structure used to construct more abstract data structures such as sets, multisets, and associative arrays.


Operations

Operations on a binary search tree require comparisons between nodes. These comparisons are made with calls to a comparator, which is a subroutine that computes the total order (linear order) on any two values. This comparator can be explicitly or implicitly defined, depending on the language in which the BST is implemented.
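To make the comparator explicit, a sketch can pass it in as a parameter. This follows the style of C's `qsort` comparison functions or Java's `Comparator.compare`, which return a negative number, zero, or a positive number.

All names below (`Node`, `insert_with`, `search_with`, `case_insensitive`) are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional

# A comparator returns a negative number, zero, or a positive number when its
# first argument sorts before, equal to, or after its second argument.
Comparator = Callable[[Any, Any], int]


@dataclass
class Node:
    key: Any
    value: Any
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def insert_with(node: Optional[Node], key: Any, value: Any, cmp: Comparator) -> Node:
    """Insert (key, value) and return the subtree root; an equal key overwrites."""
    if node is None:
        return Node(key, value)
    c = cmp(key, node.key)
    if c < 0:
        node.left = insert_with(node.left, key, value, cmp)
    elif c > 0:
        node.right = insert_with(node.right, key, value, cmp)
    else:
        node.value = value
    return node


def search_with(node: Optional[Node], key: Any, cmp: Comparator) -> Any:
    """Return the value stored under key, or None if it is absent."""
    while node is not None:
        c = cmp(key, node.key)
        if c == 0:
            return node.value
        node = node.left if c < 0 else node.right
    return None


def case_insensitive(a: str, b: str) -> int:
    """Order strings ignoring case, so 'apple' and 'APPLE' are the same key."""
    a, b = a.lower(), b.lower()
    return (a > b) - (a < b)


root = None
for word, n in [("banana", 1), ("Apple", 2), ("cherry", 3)]:
    root = insert_with(root, word, n, case_insensitive)
print(search_with(root, "APPLE", case_insensitive))  # 2
```

Swapping in a different comparator changes the tree's ordering without touching the tree code. If no comparator were passed, Python's built-in `<` on the keys would play the role of the implicitly defined one.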

Searching

Searching a binary search tree for a specific value can be a recursive or iterative process. This explanation covers a recursive method.

We begin by examining the root node. If the tree is null, the value we are searching for does not exist in the tree. Otherwise, if the value equals the root's key, the search is successful. If the value is less than the root's key, we search the left subtree; similarly, if it is greater, we search the right subtree. This process is repeated until the value is found or the indicated subtree is null. If the value is not found before a null subtree is reached, then it is not present in the tree.

Here is the search algorithm in the Python programming language:

```python
# 'node' is the root of the (sub)tree being searched
def search_binary_tree(node, key):
    if node is None:
        return None  # key not found
    if key < node.key:
        return search_binary_tree(node.leftChild, key)
    elif key > node.key:
        return search_binary_tree(node.rightChild, key)
    else:  # key is equal to node key
        return node.value  # found key
```

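…or the equivalent in Haskell:

```haskell
-- Tree type assumed from the pattern matches below (not defined in the original)
data Tree k v = NullNode | Node k v (Tree k v, Tree k v)

searchBinaryTree :: Ord k => k -> Tree k v -> Maybe v
searchBinaryTree _ NullNode = Nothing
searchBinaryTree key (Node nodeKey nodeValue (leftChild, rightChild)) =
    case compare key nodeKey of
        LT -> searchBinaryTree key leftChild
        GT -> searchBinaryTree key rightChild
        EQ -> Just nodeValue
```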

This operation requires O(log n) time in the average case, but needs O(n) time in the worst case, when the unbalanced tree resembles a linked list (degenerate tree).

Assuming that BinarySearchTree is a class with a member function "search(int)" and a pointer to the root node, the algorithm is also easily implemented iteratively. The algorithm enters a loop and, at each node, decides whether to branch left or right by comparing the searched value with that node's value.

```cpp
bool BinarySearchTree::search(int val) {
    Node *next = this->root();
    while (next != NULL) {
        if (val == next->value()) {
            return true;
        } else if (val < next->value()) {
            next = next->left();
        } else {
            next = next->right();
        }
    }
    // not found
    return false;
}
```

Insertion

Insertion begins as a search would begin; if the root is not equal to the value, we search the left or right subtrees as before. Eventually, we reach an external (null) position and add the new node there as the left or right child of the last node visited, depending on how its value compares with that node's. In other words, we examine the root and recursively insert the new node into the left subtree if the new value is less than the root's, or into the right subtree if the new value is greater than or equal to the root's.

Here's how a typical binary search tree insertion might be performed in C++:

```cpp
/* Inserts the node pointed to by "newNode" into the subtree rooted at "treeNode" */
void InsertNode(Node* &treeNode, Node *newNode)
{
    if (treeNode == NULL)
        treeNode = newNode;
    else if (newNode->key < treeNode->key)
        InsertNode(treeNode->left, newNode);
    else
        InsertNode(treeNode->right, newNode);
}
```

The above "destructive" procedural variant modifies the tree in place. It uses only constant extra heap space (although the recursion itself uses stack space proportional to the height of the tree), but the previous version of the tree is lost. Alternatively, as in the following Python example, we can reconstruct all ancestors of the inserted node. Any reference to the original tree root then remains valid, making the tree a persistent data structure:

```python
def binary_tree_insert(node, key, value):
    if node is None:
        return TreeNode(None, key, value, None)
    if key == node.key:
        return TreeNode(node.left, key, value, node.right)
    if key < node.key:
        return TreeNode(binary_tree_insert(node.left, key, value), node.key, node.value, node.right)
    else:
        return TreeNode(node.left, node.key, node.value, binary_tree_insert(node.right, key, value))
```

The part that is rebuilt uses Θ(log n) space in the average case and O(n) in the worst case (see big-O notation).
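The following small experiment makes the persistence claim concrete. It assumes `TreeNode` is a `namedtuple` whose field order matches the calls above (the original does not define it). `keys` is a hypothetical helper that lists a tree's keys in order.

Inserting into `v1` produces `v2` without disturbing `v1`. The subtree off the search path is shared between the two versions rather than copied.

```python
from collections import namedtuple

# Assumed node type: field order matches the TreeNode(left, key, value, right)
# calls in the insertion function above.
TreeNode = namedtuple("TreeNode", "left key value right")


def binary_tree_insert(node, key, value):
    """Persistent insert, as above: copies only the nodes on the search path."""
    if node is None:
        return TreeNode(None, key, value, None)
    if key == node.key:
        return TreeNode(node.left, key, value, node.right)
    if key < node.key:
        return TreeNode(binary_tree_insert(node.left, key, value), node.key, node.value, node.right)
    return TreeNode(node.left, node.key, node.value, binary_tree_insert(node.right, key, value))


def keys(node):
    """Hypothetical helper: the keys of a tree, in order."""
    return [] if node is None else keys(node.left) + [node.key] + keys(node.right)


v1 = None
for k in [50, 30, 70, 20, 40]:
    v1 = binary_tree_insert(v1, k, str(k))
v2 = binary_tree_insert(v1, 35, "35")  # v1 is left untouched

print(keys(v1))  # [20, 30, 40, 50, 70]
print(keys(v2))  # [20, 30, 35, 40, 50, 70]
print(v2.right is v1.right, v2.left is v1.left)  # True False
```

Only the three nodes on the path from the root to the new key (50, 30 and 40) are copied. That path is the rebuilt part whose size is Θ(log n) on average and O(n) in the worst case.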

In either version, this operation requires time proportional to the height of the tree in the worst case, which is O(log n) time in the average case over all trees, but O(n) time in the worst case.

Another way to explain insertion is that in order to insert a new node in the tree, its value is first compared with the value of the root. If its value is less than the root's, it is then compared with the value of the root's left child. If its value is greater, it is compared with the root's right child. This process continues until the new node is compared with a leaf node, and then it is added as this node's right or left child, depending on its value.

There are other ways of inserting nodes into a binary tree, but this is the only way of inserting nodes at the leaves and at the same time preserving the BST structure.

Here is an iterative approach to inserting into a binary search tree in Java:

```java
private Node m_root;

public void insert(int data) {
    if (m_root == null) {
        m_root = new TreeNode(data, null, null);
        return;
    }
    Node root = m_root;
    while (root != null) {
        // Not the same value twice
        if (data == root.getData()) {
            return;
        } else if (data < root.getData()) {
            // insert left
            if (root.getLeft() == null) {
                root.setLeft(new TreeNode(data, null, null));
                return;
            } else {
                root = root.getLeft();
            }
        } else {
            // insert right
            if (root.getRight() == null) {
                root.setRight(new TreeNode(data, null, null));
                return;
            } else {
                root = root.getRight();
            }
        }
    }
}
```

Below is a recursive approach to the insertion method.

```java
private Node m_root;

public void insert(int data) {
    if (m_root == null) {
        m_root = new TreeNode(data, null, null);
    } else {
        internalInsert(m_root, data);
    }
}

private static void internalInsert(Node node, int data) {
    // Not the same value twice
    if (data == node.getValue()) {
        return;
    } else if (data < node.getValue()) {
        if (node.getLeft() == null) {
            node.setLeft(new TreeNode(data, null, null));
        } else {
            internalInsert(node.getLeft(), data);
        }
    } else {
        if (node.getRight() == null) {
            node.setRight(new TreeNode(data, null, null));
        } else {
            internalInsert(node.getRight(), data);
        }
    }
}
```

Deletion

There are three possible cases to consider:

- Deleting a leaf (node with no children): Deleting a leaf is easy, as we can simply remove it from the tree.

- Deleting a node with one child: Remove the node and replace it with its child.

- Deleting a node with two children: Call the node to be deleted N. Do not delete N. Instead, choose either its in-order successor node or its in-order predecessor node, R. Replace the value of N with the value of R, then delete R.

As with all binary trees, when a node has two children, its in-order successor is the left-most node of its right subtree, and its in-order predecessor is the right-most node of its left subtree. In either case, this node has zero or one children, so it can be deleted according to one of the two simpler cases above.

Consistently using the in-order successor, or consistently using the in-order predecessor, for every instance of the two-child case can lead to an unbalanced tree. Good implementations therefore vary this choice rather than always making the same one.

Running time analysis: Although this operation does not always traverse the tree down to a leaf, this is always a possibility; thus in the worst case it requires time proportional to the height of the tree. It does not require more even when the node has two children, since it still follows a single path and does not visit any node twice.

Here is the code in Python:

```python
# Methods of a node class with key, parent, left_child and right_child attributes

def findMin(self):
    '''Finds the node with the smallest key in the subtree rooted at *self*'''
    current_node = self
    while current_node.left_child:
        current_node = current_node.left_child
    return current_node

def replace_node_in_parent(self, new_value=None):
    '''Removes the reference to *self* from *self.parent* and replaces it with *new_value*.'''
    if self.parent:
        if self is self.parent.left_child:
            self.parent.left_child = new_value
        else:
            self.parent.right_child = new_value
    if new_value:
        new_value.parent = self.parent

def binary_tree_delete(self, key):
    # Note: if *self* is the root and has at most one child, the caller must
    # update its own reference to the root, since there is no parent to update.
    if key < self.key:
        if self.left_child:  # otherwise the key is not in the tree
            self.left_child.binary_tree_delete(key)
    elif key > self.key:
        if self.right_child:  # otherwise the key is not in the tree
            self.right_child.binary_tree_delete(key)
    else:  # delete the key here
        if self.left_child and self.right_child:  # if both children are present
            # get the smallest node that's bigger than *self*
            successor = self.right_child.findMin()
            self.key = successor.key
            # if *successor* has a child, replace it with that
            # at this point, it can only have a *right_child*
            # if it has no children, *right_child* will be "None"
            successor.replace_node_in_parent(successor.right_child)
        elif self.left_child or self.right_child:  # if the node has only one child
            if self.left_child:
                self.replace_node_in_parent(self.left_child)
            else:
                self.replace_node_in_parent(self.right_child)
        else:  # this node has no children
            self.replace_node_in_parent(None)
```

Source code in C++ (from http://www.algolist.net/Data_structures/Binary_search_tree).

This URL also explains the operation nicely using diagrams.

```cpp
bool BinarySearchTree::remove(int value) {
    if (root == NULL)
        return false;
    else {
        if (root->getValue() == value) {
            BSTNode auxRoot(0);
            auxRoot.setLeftChild(root);
            BSTNode* removedNode = root->remove(value, &auxRoot);
            root = auxRoot.getLeft();
            if (removedNode != NULL) {
                delete removedNode;
                return true;
            } else
                return false;
        } else {
            BSTNode* removedNode = root->remove(value, NULL);
            if (removedNode != NULL) {
                delete removedNode;
                return true;
            } else
                return false;
        }
    }
}

BSTNode* BSTNode::remove(int value, BSTNode *parent) {
    if (value < this->value) {
        if (left != NULL)
            return left->remove(value, this);
        else
            return NULL;
    } else if (value > this->value) {
        if (right != NULL)
            return right->remove(value, this);
        else
            return NULL;
    } else {
        if (left != NULL && right != NULL) {
            // two children: copy the successor's value, then remove the successor
            this->value = right->minValue();
            return right->remove(this->value, this);
        } else if (parent->left == this) {
            parent->left = (left != NULL) ? left : right;
            return this;
        } else if (parent->right == this) {
            parent->right = (left != NULL) ? left : right;
            return this;
        }
    }
    return NULL;  // not reached when parent links are consistent
}

int BSTNode::minValue() {
    if (left == NULL)
        return value;
    else
        return left->minValue();
}
```

Traversal

Once the binary search tree has been created, its elements can be retrieved in order by recursively traversing the left subtree of the root node, accessing the node itself, and then recursively traversing the right subtree of the node. This pattern continues with each node in the tree as it is recursively accessed. As with all binary trees, one may conduct a pre-order traversal or a post-order traversal, but neither is likely to be useful for binary search trees.

The code for in-order traversal in Python is given below. It will call callback for every node in the tree.

```python
def traverse_binary_tree(node, callback):
    if node is None:
        return
    traverse_binary_tree(node.leftChild, callback)
    callback(node.value)
    traverse_binary_tree(node.rightChild, callback)
```

Traversal requires Ω(n) time, since it must visit every node. This algorithm is also O(n), so it is asymptotically optimal.

In-order traversal in a binary search tree without recursion

The code for in-order traversal in C is given below.

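```c
#include <stdio.h>

/* Node layout assumed from the field names used below */
struct Node {
    int val;
    struct Node *lptr, *rptr;  /* left and right children */
};

/* Prints the values in order without recursion or a stack: each node's
   in-order predecessor temporarily gets a right link back to the node. */
void InOrderTraversal(struct Node *n)
{
    struct Node *Cur, *Pre;
    if (n == NULL)
        return;
    Cur = n;
    while (Cur != NULL) {
        if (Cur->lptr == NULL) {
            printf("\t%d", Cur->val);
            Cur = Cur->rptr;
        } else {
            /* find the in-order predecessor of Cur */
            Pre = Cur->lptr;
            while (Pre->rptr != NULL && Pre->rptr != Cur)
                Pre = Pre->rptr;
            if (Pre->rptr == NULL) {
                Pre->rptr = Cur;   /* make a temporary link back to Cur */
                Cur = Cur->lptr;
            } else {
                Pre->rptr = NULL;  /* remove the temporary link */
                printf("\t%d", Cur->val);
                Cur = Cur->rptr;
            }
        }
    }
}
```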
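The C routine above is an instance of what is commonly called Morris traversal. It temporarily rewires `rptr` pointers to find its way back up the tree, so it needs no stack, but it modifies the tree while it runs.

The more common non-recursive alternative keeps the path back to the root on an explicit stack. This leaves the tree untouched, at the cost of O(h) extra space for a tree of height h. A sketch in Python, where `Node` and `inorder` are hypothetical names:

```python
from dataclasses import dataclass
from typing import Any, Iterator, Optional


@dataclass
class Node:
    value: Any
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def inorder(root: Optional[Node]) -> Iterator[Any]:
    """Yield values in order using an explicit stack instead of recursion."""
    stack = []
    node = root
    while stack or node is not None:
        # Walk as far left as possible, remembering the path on the stack.
        while node is not None:
            stack.append(node)
            node = node.left
        node = stack.pop()
        yield node.value   # every smaller value has already been yielded
        node = node.right  # continue with the right subtree


tree = Node(4, Node(2, Node(1), Node(3)), Node(6, Node(5), Node(7)))
print(list(inorder(tree)))  # [1, 2, 3, 4, 5, 6, 7]
```

Because `inorder` is a generator, a caller can stop after the first few values without visiting the rest of the tree.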

Sort

A binary search tree can be used to implement a simple but efficient sorting algorithm. Similar to heapsort, we insert all the values we wish to sort into a new ordered data structure (in this case a binary search tree) and then traverse it in order, building our result:

```python
def build_binary_tree(values):
    tree = None
    for v in values:
        tree = binary_tree_insert(tree, v, v)  # use each value as its own key
    return tree

def get_inorder_traversal(root):
    '''
    Returns a list containing all the values in the tree, starting at *root*.
    Traverses the tree in-order (leftChild, root, rightChild).
    '''
    result = []
    traverse_binary_tree(root, lambda element: result.append(element))
    return result
```

The worst-case time of build_binary_tree is O(n²): if you feed it a sorted list of values, it chains them into a linked list with no left subtrees. For example, build_binary_tree([1, 2, 3, 4, 5]) yields the tree (1 (2 (3 (4 (5))))).
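The quadratic worst case is easy to observe by counting comparisons. The sketch below uses hypothetical `Node`, `build` and `height` helpers to insert into a plain unbalanced tree. It inserts iteratively, so a long chain cannot overflow Python's recursion limit.

```python
from dataclasses import dataclass
from typing import Any, Iterable, Optional, Tuple


@dataclass
class Node:
    key: Any
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def build(values: Iterable[Any]) -> Tuple[Optional[Node], int]:
    """Insert values one by one; return (root, number of key comparisons)."""
    root, comparisons = None, 0
    for key in values:
        new = Node(key)
        if root is None:
            root = new
            continue
        node = root
        while True:
            comparisons += 1
            if key < node.key:
                if node.left is None:
                    node.left = new
                    break
                node = node.left
            else:
                if node.right is None:
                    node.right = new
                    break
                node = node.right
    return root, comparisons


def height(root: Optional[Node]) -> int:
    """Number of levels, counted breadth-first so deep chains need no recursion."""
    levels = 0
    frontier = [root] if root is not None else []
    while frontier:
        levels += 1
        frontier = [c for node in frontier for c in (node.left, node.right) if c is not None]
    return levels


n = 1000
chain, cost = build(range(1, n + 1))
print(height(chain), cost)  # 1000 499500, i.e. n and n(n - 1)/2
bushy, _ = build([3, 1, 4, 2, 5])
print(height(bushy))  # 3
```

Sorted input produces a chain as tall as the input is long. Inserting the k-th value costs k − 1 comparisons, for n(n − 1)/2 comparisons in total.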

There are several schemes for overcoming this flaw with simple binary trees; the most common is the self-balancing binary search tree. If this same procedure is done using such a tree, the overall worst-case time is O(n log n), which is asymptotically optimal for a comparison sort. In practice, the poor cache performance and added overhead in time and space for a tree-based sort (particularly for node allocation) make it inferior to other asymptotically optimal sorts such as heapsort for static list sorting. On the other hand, it is one of the most efficient methods of incremental sorting, adding items to a list over time while keeping the list sorted at all times.

Types

There are many types of binary search trees. AVL trees and red-black trees are both forms of self-balancing binary search trees. A splay tree is a binary search tree that automatically moves frequently accessed elements nearer to the root. In a treap ("tree heap"), each node also holds a (randomly chosen) priority, and the parent node has higher priority than its children. Tango trees are trees optimized for fast searches.
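A treap keeps BST order on its keys and heap order on its random priorities at the same time. In the minimal insertion sketch below, a new key is inserted as in an ordinary BST and then rotated upward until its parent has a higher priority.

The sketch assumes distinct keys and uses `random.random()` for priorities. All names in it are hypothetical.

```python
import random
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class TreapNode:
    key: Any
    # Random priority; parents must outrank their children (max-heap order).
    priority: float = field(default_factory=random.random)
    left: Optional["TreapNode"] = None
    right: Optional["TreapNode"] = None


def rotate_right(node: TreapNode) -> TreapNode:
    """Lift node.left above node; the in-order sequence of keys is unchanged."""
    child = node.left
    node.left, child.right = child.right, node
    return child


def rotate_left(node: TreapNode) -> TreapNode:
    """Mirror image of rotate_right."""
    child = node.right
    node.right, child.left = child.left, node
    return child


def treap_insert(node: Optional[TreapNode], key: Any) -> TreapNode:
    """BST insertion, then rotate the new node up past lower-priority parents."""
    if node is None:
        return TreapNode(key)
    if key < node.key:
        node.left = treap_insert(node.left, key)
        if node.left.priority > node.priority:
            node = rotate_right(node)
    elif key > node.key:
        node.right = treap_insert(node.right, key)
        if node.right.priority > node.priority:
            node = rotate_left(node)
    return node  # an equal key is ignored


def is_treap(node, low=None, high=None) -> bool:
    """Check BST order on keys and heap order on priorities."""
    if node is None:
        return True
    if (low is not None and node.key <= low) or (high is not None and node.key >= high):
        return False
    for child in (node.left, node.right):
        if child is not None and child.priority > node.priority:
            return False
    return is_treap(node.left, low, node.key) and is_treap(node.right, node.key, high)


def inorder_keys(node) -> list:
    return [] if node is None else inorder_keys(node.left) + [node.key] + inorder_keys(node.right)


def height(node) -> int:
    return 0 if node is None else 1 + max(height(node.left), height(node.right))


random.seed(1)  # fixed seed so the run is repeatable
root = None
for k in range(100):  # sorted input: a plain BST would become a 100-level chain
    root = treap_insert(root, k)
print(is_treap(root), height(root))
```

The random priorities make the treap shaped like a BST built from a random insertion order, so sorted input no longer produces a chain.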

Two other terms used to describe binary search trees are complete and degenerate. A complete tree is a tree with n levels, numbered 0 (the root) to n − 1, in which every level d < n − 1 contains exactly 2^d nodes. This means all possible nodes exist at these levels. An additional requirement for a complete binary tree is that on the last level, while not every node has to exist, the nodes that do exist must fill from left to right.

A degenerate tree is a tree in which each parent node has only one associated child node. This means that, in terms of performance, the tree essentially behaves like a linked list data structure.
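Both definitions can be checked mechanically. The sketch below assumes a hypothetical `Node` class. It tests completeness with a level-order (breadth-first) scan, which visits nodes level by level from left to right, and tests degeneracy by following the single downward path.

```python
from collections import deque
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class Node:
    key: Any
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def is_complete(root: Optional[Node]) -> bool:
    """Level-order scan: once a missing child is seen, no further node may appear."""
    queue = deque([root])
    seen_gap = False
    while queue:
        node = queue.popleft()
        if node is None:
            seen_gap = True
        elif seen_gap:
            return False  # a node after a gap: levels are not filled left to right
        else:
            queue.append(node.left)
            queue.append(node.right)
    return True


def is_degenerate(root: Optional[Node]) -> bool:
    """True if no node has two children, so the tree is a single path."""
    node = root
    while node is not None:
        if node.left is not None and node.right is not None:
            return False
        node = node.left if node.left is not None else node.right
    return True


complete = Node(4, Node(2, Node(1), Node(3)), Node(6, Node(5)))
gappy = Node(4, Node(2, None, Node(3)), Node(6))
chain = Node(1, right=Node(2, right=Node(3)))
print(is_complete(complete), is_complete(gappy), is_degenerate(chain))  # True False True
```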

Performance comparisons

D. A. Heger (2004)[2] presented a performance comparison of binary search trees. Treaps were found to have the best average performance, while red-black trees were found to have the smallest performance fluctuations.

Optimal binary search trees

If we don't plan on modifying a search tree, and we know exactly how often each item will be accessed, we can construct an optimal binary search tree. This is a search tree in which the average cost of looking up an item (the expected search cost) is minimized.

Even if we only have estimates of the search costs, such a system can considerably speed up lookups on average. For example, if you have a BST of English words used in a spell checker, you might balance the tree based on word frequency in text corpora, placing words like "the" near the root and words like "agerasia" near the leaves. Such a tree might be compared with Huffman trees, which similarly seek to place frequently used items near the root in order to produce a dense information encoding. However, Huffman trees only store data elements in leaves, and these elements need not be ordered.

If we do not know in advance the sequence in which the elements in the tree will be accessed, we can use splay trees. These are asymptotically as good as any static search tree we can construct for any particular sequence of lookup operations.

Alphabetic trees are Huffman trees with the additional constraint on order, or, equivalently, search trees with the modification that all elements are stored in the leaves.

Faster algorithms exist for optimal alphabetic binary trees (OABTs).

Example:

```
procedure Optimum Search Tree(f, f´, c):
    for j = 0 to n do
        c[j, j] = 0, F[j, j] = f´_j
    for d = 1 to n do
        for i = 0 to (n − d) do
            j = i + d
            F[i, j] = F[i, j − 1] + f_j + f´_j
            c[i, j] = MIN(i<k<=j){c[i, k − 1] + c[k, j]} + F[i, j]
```
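The following is a direct Python transcription of the pseudocode above. It rests on an assumption suggested by the recurrence: `f[k]` is the access frequency of the k-th key, and `f´[j]` (here `fp[j]`) is the frequency of unsuccessful searches that land in the j-th gap between keys.

It also records which key achieves each minimum, so the tree itself can be rebuilt. The three nested loops make it O(n³) time and O(n²) space. The function name `optimum_search_tree` is hypothetical.

```python
from typing import List, Sequence, Tuple


def optimum_search_tree(f: Sequence[float], fp: Sequence[float]) -> Tuple[float, List[List[int]]]:
    """Return (c[0][n], root table) for keys 1..n.

    f[k - 1] is the frequency of key k (k = 1..n); fp[j] plays the role of f´_j
    for gap j (j = 0..n). root[i][j] is the key chosen as root of subtree (i, j].
    """
    n = len(f)
    assert len(fp) == n + 1, "need one gap frequency per gap: n + 1 values"
    c = [[0.0] * (n + 1) for _ in range(n + 1)]
    F = [[0.0] * (n + 1) for _ in range(n + 1)]
    root = [[0] * (n + 1) for _ in range(n + 1)]
    for j in range(n + 1):
        F[j][j] = fp[j]  # c[j][j] is already 0
    for d in range(1, n + 1):
        for i in range(n - d + 1):
            j = i + d
            F[i][j] = F[i][j - 1] + f[j - 1] + fp[j]
            # Try every key k in (i, j] as the root of this subtree.
            best_k = min(range(i + 1, j + 1), key=lambda k: c[i][k - 1] + c[k][j])
            c[i][j] = c[i][best_k - 1] + c[best_k][j] + F[i][j]
            root[i][j] = best_k
    return c[0][n], root


cost, root = optimum_search_tree([1, 1, 1], [0, 0, 0, 0])
print(cost, root[0][3])  # 5.0 2
```

With these definitions, each key contributes its frequency once for every level from the root down to it. For three equally frequent keys and no unsuccessful searches, the cost is therefore 1 + 2 + 2 = 5, achieved with the middle key at the root.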


References

- [1] Gilberg, R.; Forouzan, B. (2001), "8", Data Structures: A Pseudocode Approach With C++, Pacific Grove, CA: Brooks/Cole, p. 339, ISBN 0-534-95216-X.
- [2] Heger, Dominique A. (2004), "A Disquisition on The Performance Behavior of Binary Search Tree Data Structures", European Journal for the Informatics Professional 5 (5): 67–75.



Binary search tree (Chinese Wikipedia)

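A binary search tree (also called a binary sort tree) is either an empty tree or a binary tree with the following properties:

- If its left subtree is not empty, the values of all nodes in the left subtree are less than the value of its root node;
- If its right subtree is not empty, the values of all nodes in the right subtree are greater than the value of its root node;
- Its left and right subtrees are themselves binary search trees.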

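The search process of a binary search tree is similar to that of a nearly optimal binary tree. A binary linked list, in which each node holds pointers to its two children, is usually used as its storage structure.

An in-order traversal of a binary search tree yields the keys as a sorted sequence. An unsorted sequence can therefore be turned into a sorted one by building a binary search tree from it; building the tree is itself the sorting process.

Each newly inserted node becomes a new leaf of the tree, so insertion never has to move other nodes. It only changes one node's child pointer from null to non-null.

The cost of search, insertion, and deletion equals the height of the tree: O(log n) expected, and O(n) in the worst case, when the input sequence is sorted and the tree degenerates into a linear list.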

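Although the worst-case cost of a binary search tree is O(n), it supports dynamic queries. Many improved variants also keep the tree height at O(log n), such as SBT, AVL trees, and red-black trees. The binary search tree is therefore still a good method for dynamic sorting.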


Search algorithm for a binary search tree

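The process of searching for x in a binary search tree b is:

- If b is an empty tree, the search fails; otherwise:
- If x equals the value of the data field of b's root node, the search succeeds; otherwise:
- If x is less than the value of the data field of b's root node, search the left subtree; otherwise:
- Search the right subtree.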

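```cpp
/* The following code is written in C++, as is the code below */
// Assumed type definitions (not shown in the original):
typedef int KeyType;
typedef struct { KeyType key; } ElemType;
typedef struct BiTNode { ElemType data; struct BiTNode *lchild, *rchild; } BiTNode, *BiTree;
typedef bool Status;

Status SearchBST(BiTree T, KeyType key, BiTree f, BiTree &p) {
    // Recursively search the binary search tree pointed to by root pointer T for
    // a data element whose key equals key. On success, p points to that element's
    // node and TRUE is returned; otherwise p points to the last node visited on
    // the search path and FALSE is returned. f points to T's parent; its initial
    // value is NULL.
    if (!T) {  // search unsuccessful
        p = f;
        return false;
    }
    else if (key == T->data.key) {  // search successful
        p = T;
        return true;
    }
    else if (key < T->data.key)  // continue searching in the left subtree
        return SearchBST(T->lchild, key, T, p);
    else  // continue searching in the right subtree
        return SearchBST(T->rchild, key, T, p);
}
```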

Algorithm for inserting a node into a binary search tree

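The algorithm for inserting a node s into a binary search tree b is:

- If b is an empty tree, insert the node pointed to by s as the root; otherwise:
- If s->data equals the value of the data field of b's root node, return; otherwise:
- If s->data is less than the value of the data field of b's root node, insert the node pointed to by s into the left subtree; otherwise:
- Insert the node pointed to by s into the right subtree.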

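```cpp
/* If T contains no data element whose key equals e.key, insert e and return TRUE; otherwise return FALSE */
Status InsertBST(BiTree &T, ElemType e) {
    BiTree p, s;
    if (!SearchBST(T, e.key, NULL, p)) {
        s = new BiTNode;
        s->data = e;
        s->lchild = s->rchild = NULL;
        if (!p) T = s;                                // the inserted node *s becomes the new root
        else if (e.key < p->data.key) p->lchild = s;  // the inserted node *s is a left child
        else p->rchild = s;                           // the inserted node *s is a right child
        return true;
    }
    else return false;  // a node with the same key is already in the tree; do not insert
}
```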

Algorithm for deleting a node from a binary search tree

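Deleting a node *p from a binary search tree falls into three cases:

- Node *p is a leaf, i.e. both PL (its left subtree) and PR (its right subtree) are empty. Deleting a leaf does not disturb the structure of the rest of the tree, so only the pointer in its parent needs to be modified.
- Node *p has only a left subtree PL or only a right subtree PR. Simply make PL or PR the left subtree (when *p is a left child) or the right subtree (when *p is a right child) of its parent *f. This modification also preserves the binary-search-tree property.
- Both the left and right subtrees of *p are non-empty. After deleting *p, the relative order of the other elements must be kept unchanged, so the tree is adjusted such that its in-order sequence stays sorted. There are two ways to do this:
  - Make *p's left subtree the left subtree of *f (here *p is taken to be *f's left child), and make *p's right subtree the right subtree of *s, where *s is the bottom-rightmost node of *f's new left subtree.
  - Replace *p with its in-order predecessor (or successor), and then delete that predecessor (or successor) from the tree.

The algorithm for deleting a node from a binary search tree is as follows: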

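```cpp
Status Delete(BiTree &p);  // forward declaration: defined below

Status DeleteBST(BiTree &T, KeyType key) {
    // If T contains a data element whose key equals key, delete it and return
    // TRUE; otherwise return FALSE
    if (!T) return false;  // no data element has a key equal to key
    else {
        if (key == T->data.key) {  // found the data element whose key equals key
            return Delete(T);
        }
        else if (key < T->data.key)
            return DeleteBST(T->lchild, key);
        else
            return DeleteBST(T->rchild, key);
    }
}

Status Delete(BiTree &p) {
    // Delete node p from the binary search tree and reattach its left or right subtree
    BiTree q, s;
    if (!p->rchild) {  // right subtree empty: just reattach the left subtree
        q = p; p = p->lchild; delete q;
    }
    else if (!p->lchild) {  // left subtree empty: just reattach the right subtree
        q = p; p = p->rchild; delete q;
    }
    else {  // both subtrees non-empty
        q = p; s = p->lchild;
        while (s->rchild) { q = s; s = s->rchild; }  // go left, then right as far as possible
        p->data = s->data;  // s points to the deleted node's in-order predecessor
        if (q != p) q->rchild = s->lchild;  // reattach *q's right subtree
        else q->lchild = s->lchild;         // reattach *q's left subtree
        delete s;
    }
    return true;
}
```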

Performance analysis of binary search trees

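For each node, C_i (the number of comparisons needed to find it) equals the node's level.

In the worst case, when the keys are inserted in sorted order, the resulting binary search tree degenerates into a single-branch tree of depth n. Its average search length is then (n + 1)/2, the same as for sequential search.

In the best case, the binary search tree has the same shape as the decision tree of binary search. Its average search length is then proportional to log₂ n, i.e. O(log₂ n).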
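Both cases can be worked out under the common assumption that each of the n keys is searched for with equal probability, with the root at level 1. The degenerate case is a single-branch tree. The best case is written for a perfectly balanced tree with n = 2^h − 1 nodes, where level i holds 2^{i−1} nodes:

```latex
% Degenerate (single-branch) tree: the i-th node sits at level i, so C_i = i.
\mathrm{ASL}_{\text{worst}} = \frac{1}{n}\sum_{i=1}^{n} i = \frac{n+1}{2}

% Balanced tree with n = 2^h - 1 nodes: level i holds 2^{i-1} nodes.
\mathrm{ASL}_{\text{best}} = \frac{1}{n}\sum_{i=1}^{h} i \, 2^{i-1}
  = \frac{(h-1)\,2^{h} + 1}{n}
  = \frac{n+1}{n}\log_2(n+1) - 1 \approx \log_2(n+1) - 1
```

For n = 7, for example, the worst case gives 4 comparisons on average, while the balanced tree gives (1 + 2·2 + 3·4)/7 = 17/7 ≈ 2.43.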


Optimizations of binary search trees

See the main article: Balanced tree.

- Size Balanced Tree (SBT)
- AVL tree
- Red-black tree
- Treap (tree + heap)

All of these keep the height of the search tree at O(log n).