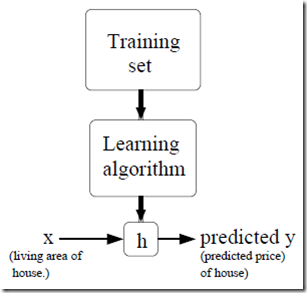

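Supervised learning

Given a training set, the goal is to learn a function h : X → Y such that h(x) is a good predictor of the corresponding value of y.

The function h is called a hypothesis.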

I. Linear Regression

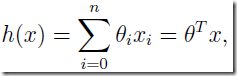

In this example, x is a two-dimensional vector: x1 is the living area and x2 is the number of bedrooms.

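We approximate y with a linear function (hypothesis) h of x, parameterized by θ.

Setting x0 = 1 (the intercept term) lets the hypothesis be written more compactly.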
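The formulas themselves are not reproduced in the text of these notes. Assuming the standard linear regression setup with the two features above, the hypothesis and its compact form with x0 = 1 would read as follows (n, the number of features, is notation introduced here):

$$
h_\theta(x) = \theta_0 + \theta_1 x_1 + \theta_2 x_2
$$

$$
h(x) = \sum_{j=0}^{n} \theta_j x_j = \theta^T x, \qquad x_0 = 1
$$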

Now, given a training set, how do we pick, or learn, the parameters θ? The problem reduces to finding the parameters θ.

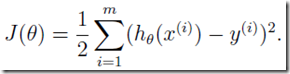

One reasonable method seems to be to make h(x) close to y, at least for the training examples we have.

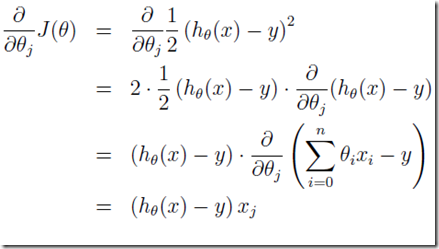

We define the cost function J(θ), which measures how close the predictions h(x) are to the targets y on the training set.
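As a rough illustration, the sketch below assumes the usual least-squares cost J(θ) = ½ Σᵢ (h(x⁽ⁱ⁾) − y⁽ⁱ⁾)². The function names `h` and `cost` and the toy data are made up for this example and do not come from the notes.

```python
def h(theta, x):
    """Linear hypothesis theta^T x; x[0] is assumed to be the intercept term 1."""
    return sum(t * xj for t, xj in zip(theta, x))


def cost(theta, X, y):
    """Least-squares cost: half the sum of squared prediction errors."""
    return 0.5 * sum((h(theta, xi) - yi) ** 2 for xi, yi in zip(X, y))


# Toy training set (invented): the targets follow y = 1 + 2 * x1 exactly.
X = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]]
y = [1.0, 3.0, 5.0]

print(cost([1.0, 2.0], X, y))  # 0.0, the parameters fit the data exactly
print(cost([0.0, 0.0], X, y))  # 17.5 = 0.5 * (1 + 9 + 25)
```

A smaller J(θ) means the hypothesis is closer to the targets on the training set, which is why the next step is to minimize it.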

1. LMS algorithm (least mean squares)

We want to choose θ so as to minimize J(θ).

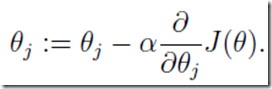

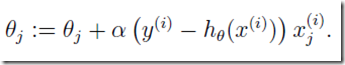

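This is the gradient descent algorithm: start from an initial guess for θ and repeatedly step in the direction that decreases J(θ) fastest.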

α is called the learning rate.
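For reference, and assuming the least-squares cost J(θ) = ½ (h_θ(x) − y)² for a single training example (x, y), the update rule and the derivative it relies on can be worked out as:

$$
\theta_j := \theta_j - \alpha \frac{\partial}{\partial \theta_j} J(\theta)
$$

$$
\frac{\partial}{\partial \theta_j} J(\theta)
= \left(h_\theta(x) - y\right) \frac{\partial}{\partial \theta_j} \left( \sum_{k=0}^{n} \theta_k x_k - y \right)
= \left(h_\theta(x) - y\right) x_j
$$

$$
\theta_j := \theta_j + \alpha \left(y - h_\theta(x)\right) x_j
$$

The size of each update is proportional to the error y − h_θ(x), so examples that are already predicted well barely change θ.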

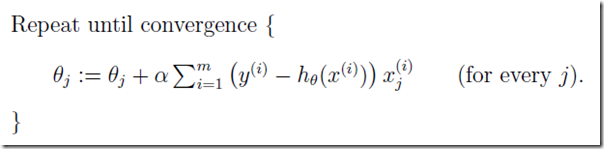

When every update sums the error over the entire training set, the method is called batch gradient descent.

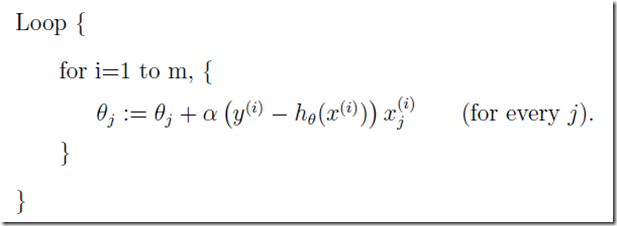

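Algorithm:

In each iteration, updating any single parameter θj requires a sum over all m training examples (i = 1, 2, …, m), where m is the size of the training set.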
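The loop described above might be sketched as follows. This is a minimal illustration under the least-squares assumption, not the exact procedure shown in the figures; the function name `batch_gradient_descent`, the learning rate, the iteration count, and the toy data are all chosen for this example.

```python
def h(theta, x):
    """Linear hypothesis theta^T x; x[0] is assumed to be the intercept term 1."""
    return sum(t * xj for t, xj in zip(theta, x))


def batch_gradient_descent(X, y, alpha=0.1, iterations=1000):
    """Every step uses the errors of all m examples before changing theta."""
    n = len(X[0])
    theta = [0.0] * n
    for _ in range(iterations):
        # Errors are computed once per step, with the current theta.
        errors = [yi - h(theta, xi) for xi, yi in zip(X, y)]
        # Each theta_j is updated with a sum over the whole training set.
        theta = [
            theta[j] + alpha * sum(e * xi[j] for e, xi in zip(errors, X))
            for j in range(n)
        ]
    return theta


# Toy training set (invented): the targets follow y = 1 + 2 * x1 exactly.
X = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]]
y = [1.0, 3.0, 5.0]

theta = batch_gradient_descent(X, y)
print([round(t, 3) for t in theta])  # approximately [1.0, 2.0]
```

All parameters are updated simultaneously from the same set of errors, so one step costs a full pass over the m examples.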

If m is very large, each step of this algorithm is slow, so stochastic gradient descent is used instead.
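For comparison, a stochastic variant can be sketched as below: θ is updated after each individual example rather than after a full pass. The function name `stochastic_gradient_descent`, the shuffling of the example order, the seed, and the hyperparameters are assumptions made for this illustration.

```python
import random


def h(theta, x):
    """Linear hypothesis theta^T x; x[0] is assumed to be the intercept term 1."""
    return sum(t * xj for t, xj in zip(theta, x))


def stochastic_gradient_descent(X, y, alpha=0.1, epochs=500, seed=0):
    """Update theta from one training example at a time."""
    rng = random.Random(seed)
    theta = [0.0] * len(X[0])
    indices = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the examples in a random order
        for i in indices:
            error = y[i] - h(theta, X[i])
            # A single example is enough to move every theta_j.
            theta = [t + alpha * error * xj for t, xj in zip(theta, X[i])]
    return theta


# Toy training set (invented): the targets follow y = 1 + 2 * x1 exactly.
X = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]]
y = [1.0, 3.0, 5.0]

theta = stochastic_gradient_descent(X, y)
print([round(t, 3) for t in theta])  # approximately [1.0, 2.0]
```

Each update costs the same regardless of m, which is why this variant makes progress sooner on large training sets. On noisy data with a fixed learning rate it tends to hover near the minimum rather than settle exactly on it.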