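RANSAC is a very simple algorithm.

It is used to remove noisy samples from a set of samples, leaving only the valid ones.

It works by random sampling followed by verification.

The following is excerpted from Wikipedia; I have pasted over the useful parts.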

RANSAC

RANSAC is an abbreviation for "RANdom SAmple Consensus". It is an algorithm to estimate parameters of a mathematical model from a set of observed data which contains outliers. The algorithm was first published by Fischler and Bolles in 1981.

A basic assumption is that the data consists of "inliers", i.e., data points which can be explained by some set of model parameters, and "outliers" which are data points that do not fit the model. In addition to this, the data points can be subject to noise. The outliers can come, e.g., from extreme values of the noise or from erroneous measurements or incorrect hypotheses about the interpretation of data. RANSAC also assumes that, given a (usually small) set of inliers, there exists a procedure which can estimate the parameters of a model that optimally explains or fits this data.

Example

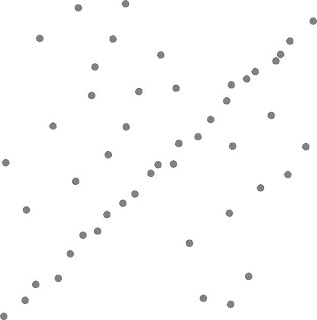

A simple example is fitting a 2D line to a set of observations. Assuming that this set contains both inliers, i.e., points which can approximately be fitted to a line, and outliers, points which cannot be fitted to this line, a simple least squares method for line fitting will in general produce a line with a bad fit to the inliers. The reason is that it is optimally fitted to all points, including the outliers. RANSAC, on the other hand, can produce a model which is computed only from the inliers, provided that the probability of choosing only inliers in the selection of data points is sufficiently high. There is no guarantee for this situation, however, and there are a number of algorithm parameters which must be carefully chosen to keep the level of probability reasonably high.
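To make the effect of outliers on least squares concrete, here is a minimal sketch in plain Python. The data values and the helper name `fit_line_least_squares` are invented for illustration and do not come from the Wikipedia article; the line is modelled as y = a*x + b.

```python
def fit_line_least_squares(points):
    """Ordinary least squares fit of y = a*x + b; returns (a, b)."""
    m = len(points)
    mean_x = sum(x for x, _ in points) / m
    mean_y = sum(y for _, y in points) / m
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b

# Hypothetical data: ten points exactly on y = 2x + 1, plus two gross outliers.
inliers = [(float(x), 2.0 * x + 1.0) for x in range(10)]
outliers = [(8.0, -20.0), (9.0, -25.0)]

slope_clean, intercept_clean = fit_line_least_squares(inliers)
slope_all, intercept_all = fit_line_least_squares(inliers + outliers)

print("inliers only:", slope_clean, intercept_clean)
print("with outliers:", slope_all, intercept_all)
```

With only the inliers the fit recovers a slope of about 2, while adding just two outliers drags the slope far away from it. This is the situation RANSAC is meant to handle.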

Overview

The input to the RANSAC algorithm is a set of observed data values, a parameterized model which can explain or be fitted to the observations, and some confidence parameters.

RANSAC achieves its goal by iteratively selecting a random subset of the original data points. These points are hypothetical inliers and this hypothesis is then tested as follows. A model is fitted to the hypothetical inliers, that is, all free parameters of the model are reconstructed from the point set. All other data points are then tested against the fitted model, that is, for every point of the remaining set, the algorithm determines how well the point fits the estimated model. If it fits well, that point is also considered a hypothetical inlier. If sufficiently many points have been classified as hypothetical inliers relative to the estimated model, then we have a model which is reasonably good. However, it has only been estimated from the initial set of hypothetical inliers, so we reestimate the model from the entire set of hypothetical inliers. At the same time, we also estimate the error of the inliers relative to the model.

This procedure is then repeated a fixed number of times, each time producing either a model which is rejected because too few points are classified as inliers or a refined model together with a corresponding error measure. In the latter case, we keep the refined model if its error is lower than that of the last saved model.
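A single hypothesise-and-test step of this procedure might look like the sketch below for the 2D line case. The function names `line_through` and `consensus_set`, the sample data, and the use of perpendicular distance as the fit criterion are all assumptions made for this illustration.

```python
import math

def line_through(p, q):
    """Line a*x + b*y + c = 0 through p and q, scaled so that a^2 + b^2 = 1."""
    (x1, y1), (x2, y2) = p, q
    a, b = y2 - y1, x1 - x2
    norm = math.hypot(a, b)
    if norm == 0:
        raise ValueError("the two sample points coincide")
    a, b = a / norm, b / norm
    c = -(a * x1 + b * y1)
    return a, b, c

def consensus_set(points, line, t):
    """Points whose perpendicular distance to the line is below t.

    The two sample points have distance zero, so they are always included.
    """
    a, b, c = line
    return [(x, y) for x, y in points if abs(a * x + b * y + c) < t]

# Hypothetical data: four points near y = x and one gross outlier.
points = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0), (3.0, 3.05), (4.0, 10.0)]

# Hypothesis: the line through the first and third point.
hypothesis = line_through(points[0], points[2])
consensus = consensus_set(points, hypothesis, t=0.1)
print(len(consensus), "of", len(points), "points support the hypothesis")
```

If the consensus set is large enough, the model would then be refitted to all of its points; otherwise the hypothesis is discarded and a new sample is drawn.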

Algorithm

The generic RANSAC algorithm works as follows:

input:

data - a set of observed data points

model - a model that can be fitted to data points

n - the minimum number of data values required to fit the model

k - the maximum number of iterations allowed in the algorithm

t - a threshold value for determining when a data point fits a model

d - the number of close data values required to assert that a model fits well to data

output:

bestfit - model parameters which best fit the data (or nil if no good model is found)

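    iterations := 0
    bestfit := nil
    besterr := infinity
    while iterations < k
        maybeinliers := n randomly selected values from data
        maybemodel := model parameters fitted to maybeinliers
        alsoinliers := empty set
        for every point in data not in maybeinliers
            if point fits maybemodel with an error smaller than t
                add point to alsoinliers
        if the number of elements in alsoinliers is > d
            (this implies that we may have found a good model,
            now test how good it is)
            bettermodel := model parameters fitted to all points in maybeinliers and alsoinliers
            thiserr := a measure of how well bettermodel fits these points
            if thiserr < besterr
                bestfit := bettermodel
                besterr := thiserr
        increment iterations
    return bestfit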
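The generic loop can be sketched in Python roughly as follows, here applied to 2D line fitting. This is only an illustration under stated assumptions: the helper names `fit_line`, `vertical_error` and `ransac`, the use of mean absolute residual as the error measure, and the test data are all choices made for this example, not part of the original description.

```python
import random

def fit_line(points):
    """Least squares fit of y = a*x + b; returns (a, b)."""
    m = len(points)
    mean_x = sum(x for x, _ in points) / m
    mean_y = sum(y for _, y in points) / m
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    a = sxy / sxx  # raises ZeroDivisionError if all x values are equal
    return a, mean_y - a * mean_x

def vertical_error(model, point):
    """Absolute vertical distance of a point from the line (assumed measure)."""
    a, b = model
    x, y = point
    return abs(y - (a * x + b))

def ransac(data, fit, error, n, k, t, d, rng=None):
    """Return the best model found in k iterations, or None if none qualifies."""
    rng = rng or random.Random()
    bestfit, besterr = None, float("inf")
    for _ in range(k):
        sample = set(rng.sample(range(len(data)), n))
        maybeinliers = [data[i] for i in sample]
        try:
            maybemodel = fit(maybeinliers)
        except ZeroDivisionError:
            continue  # degenerate sample (vertical line); draw again
        alsoinliers = [p for i, p in enumerate(data)
                       if i not in sample and error(maybemodel, p) < t]
        if len(alsoinliers) > d:
            # Possibly a good model: refit on the whole consensus set.
            consensus = maybeinliers + alsoinliers
            bettermodel = fit(consensus)
            thiserr = sum(error(bettermodel, p) for p in consensus) / len(consensus)
            if thiserr < besterr:
                bestfit, besterr = bettermodel, thiserr
    return bestfit

# Hypothetical data: 20 points on y = 2x + 1 and 5 gross outliers.
inlier_points = [(float(x), 2.0 * x + 1.0) for x in range(20)]
outlier_points = [(2.0, 40.0), (5.0, -30.0), (10.0, 80.0), (15.0, -10.0), (18.0, 90.0)]
data = inlier_points + outlier_points

model = ransac(data, fit_line, vertical_error,
               n=2, k=100, t=0.5, d=10, rng=random.Random(0))
print(model)
```

Passing `fit` and `error` as functions keeps the loop independent of the model, which mirrors the way the pseudocode treats `model` as an input.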

The values of the parameters t and d have to be chosen according to the requirements of the application and the data set, possibly by experiment. The interesting parameter of the RANSAC algorithm is k.

To calculate the parameter k given the known probability w that a data value is good (an inlier), consider the probability z that none of the k iterations draws a sample consisting of n good data values:

$$z = (1 - w^n)^k$$

which leads to

$$k = \frac{\log z}{\log(1 - w^n)}$$

To gain additional confidence, the standard deviation or multiples thereof can be added to k. The standard deviation of k is defined as

$$\operatorname{SD}(k) = \frac{\sqrt{1 - w^n}}{w^n}$$

A common case is that w is not well known beforehand, but some rough value can be given. If n data values are drawn, the probability that all of them are good is w^n.
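As a rough numerical illustration, the sketch below computes k and its standard deviation, assuming the relation z = (1 - w^n)^k, where w^n is the probability that one sample of n values contains only good data. The function names and the example values of w, n and z are invented for this example.

```python
import math

def required_iterations(w, n, z):
    """Smallest integer k such that (1 - w**n)**k <= z."""
    if not (0.0 < w < 1.0 and 0.0 < z < 1.0):
        raise ValueError("w and z must lie strictly between 0 and 1")
    p_good_sample = w ** n  # probability that all n drawn values are good
    return math.ceil(math.log(z) / math.log(1.0 - p_good_sample))

def iterations_std_dev(w, n):
    """Standard deviation of k: sqrt(1 - w**n) / w**n."""
    p_good_sample = w ** n
    return math.sqrt(1.0 - p_good_sample) / p_good_sample

# Example: half of the data are inliers, a line needs n = 2 points,
# and we accept a 1% chance of never drawing an all-inlier sample.
k = required_iterations(w=0.5, n=2, z=0.01)
sd = iterations_std_dev(w=0.5, n=2)
print(k, sd)
```

For these values the result is k = 17 with a standard deviation of about 3.46, so adding one or two standard deviations gives roughly 21 to 24 iterations.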

Advantages and disadvantages

An advantage of RANSAC is its ability to do robust estimation of the model parameters, i.e., it can estimate the parameters with a high degree of accuracy even when outliers are present in the data set. A disadvantage of RANSAC is that there is no upper bound on the time it takes to compute these parameters. If an upper time bound is imposed (for example, by limiting the number of iterations), the solution obtained may not be optimal.

RANSAC can only estimate one model for a particular data set. As with any one-model approach, when two (or more) models exist, RANSAC may fail to find either one.

Applications

The RANSAC algorithm is often used in computer vision, e.g., to simultaneously solve the correspondence problem and estimate the fundamental matrix related to a pair of stereo cameras.

References

M. A. Fischler and R. C. Bolles (June 1981). "Random Sample Consensus: A Paradigm for Model Fitting with Applications to Image Analysis and Automated Cartography". Comm. of the ACM 24: 381–395. doi:10.1145/358669.358692.

David A. Forsyth and Jean Ponce (2003). Computer Vision: A Modern Approach. Prentice Hall. ISBN 0-13-085198-1.

Richard Hartley and Andrew Zisserman (2003). Multiple View Geometry in Computer Vision, 2nd edition, Cambridge University Press.