Original article: http://dranger.com/ffmpeg/tutorial01.html

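The linked article is a good introductory FFmpeg tutorial. However, FFmpeg has kept changing since the code was written and some of the APIs it uses have been replaced, so the program needs corresponding modifications.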

Environment: Windows 7 + VS2008 + FFmpeg 0.10

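About building FFmpeg: as a test, I built FFmpeg 0.10 (the latest version at the time of writing) myself. The resulting lib and dll files work, but the generated ffmpeg.exe does not run. For lack of time I did not track that problem down. Prebuilt Windows lib and dll files are available online (see http://ffmpeg.zeranoe.com/builds/). Beginners developing on Windows can skip the build step at first and run a small example whose output they can see right away; that gives them the confidence to get through the tedious build process later.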

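I made a few small changes to the original code. The original author's code writes .ppm files, which are hard to preview, so I wrote a function that writes .bmp files instead, using the windows.h header. For the BMP file format and the conversion, see "Reference 2" and "Reference 3" below.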

Reference 2: ffmpeg: converting to RGB and filling a bitmap

Reference 3: A brief introduction to the BMP file format
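The saveAsBitmap() function in the source code relies on the BITMAPFILEHEADER and BITMAPINFOHEADER structs from windows.h. As a portable sketch of the same file layout (my own illustration, not code from the tutorial or the references; encodeBmp24 and putLE16/putLE32 are hypothetical helper names), the code below writes the 14-byte file header and the 40-byte info header field by field in little-endian order, swaps each RGB24 pixel to B,G,R order, and pads every pixel row to a multiple of 4 bytes, as the BMP format requires:

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Append a 16-bit value in little-endian byte order.
static void putLE16(std::vector<uint8_t>& out, uint16_t v) {
    out.push_back(static_cast<uint8_t>(v & 0xFF));
    out.push_back(static_cast<uint8_t>(v >> 8));
}

// Append a 32-bit value in little-endian byte order.
static void putLE32(std::vector<uint8_t>& out, uint32_t v) {
    for (int i = 0; i < 4; ++i)
        out.push_back(static_cast<uint8_t>((v >> (8 * i)) & 0xFF));
}

// Hypothetical helper: encode a packed RGB24 image whose rows are `linesize`
// bytes apart (like AVFrame::linesize[0]) as an in-memory 24-bit top-down BMP.
std::vector<uint8_t> encodeBmp24(const uint8_t* rgb, int linesize, int width, int height) {
    assert(width > 0 && height > 0 && linesize >= width * 3);
    const uint32_t rowSize = (static_cast<uint32_t>(width) * 3 + 3) & ~3u;  // padded to 4 bytes
    const uint32_t headerSize = 14 + 40;
    const uint32_t imageSize = rowSize * static_cast<uint32_t>(height);

    std::vector<uint8_t> out;
    out.reserve(headerSize + imageSize);

    // BITMAPFILEHEADER (14 bytes)
    out.push_back('B');
    out.push_back('M');
    putLE32(out, headerSize + imageSize);  // bfSize
    putLE16(out, 0);                       // bfReserved1
    putLE16(out, 0);                       // bfReserved2
    putLE32(out, headerSize);              // bfOffBits

    // BITMAPINFOHEADER (40 bytes)
    putLE32(out, 40);                              // biSize
    putLE32(out, static_cast<uint32_t>(width));    // biWidth
    putLE32(out, static_cast<uint32_t>(-height));  // biHeight < 0 means top-down rows
    putLE16(out, 1);                               // biPlanes
    putLE16(out, 24);                              // biBitCount
    putLE32(out, 0);                               // biCompression = BI_RGB
    putLE32(out, imageSize);                       // biSizeImage
    putLE32(out, 2835);                            // biXPelsPerMeter (about 72 DPI)
    putLE32(out, 2835);                            // biYPelsPerMeter
    putLE32(out, 0);                               // biClrUsed
    putLE32(out, 0);                               // biClrImportant

    // Pixel data: swap RGB to BGR, then zero-pad the end of each row
    for (int y = 0; y < height; ++y) {
        const uint8_t* row = rgb + y * linesize;
        for (int x = 0; x < width; ++x) {
            out.push_back(row[3 * x + 2]);  // B
            out.push_back(row[3 * x + 1]);  // G
            out.push_back(row[3 * x + 0]);  // R
        }
        for (uint32_t p = static_cast<uint32_t>(width) * 3; p < rowSize; ++p)
            out.push_back(0);
    }
    return out;
}
```

For a 2x2 image, each row holds 6 bytes of pixels plus 2 bytes of padding, so the file is 54 + 2 × 8 = 70 bytes. Without that padding, any frame whose width × 3 is not a multiple of 4 comes out skewed in image viewers.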

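Building FFmpeg yourself is still indispensable: when you need to extend FFmpeg's functionality (for example, by adding FAAC), nobody online may provide matching lib and dll files. As the saying goes, heaven helps those who help themselves. Just enjoy it.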

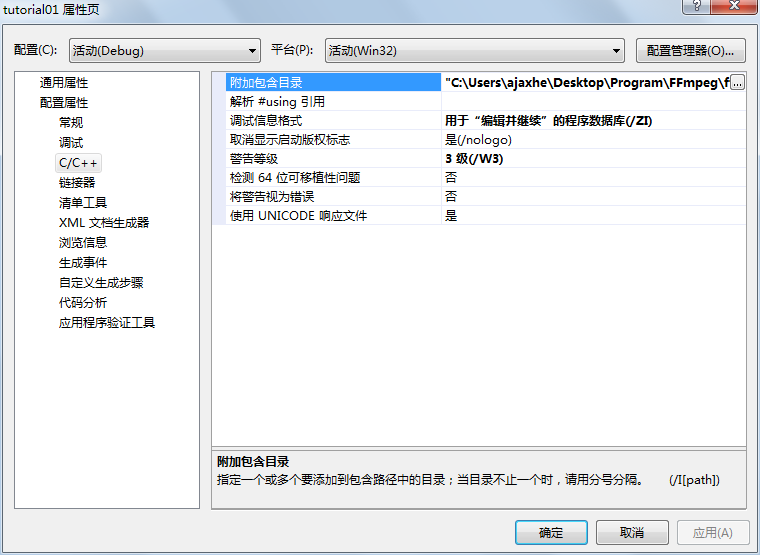

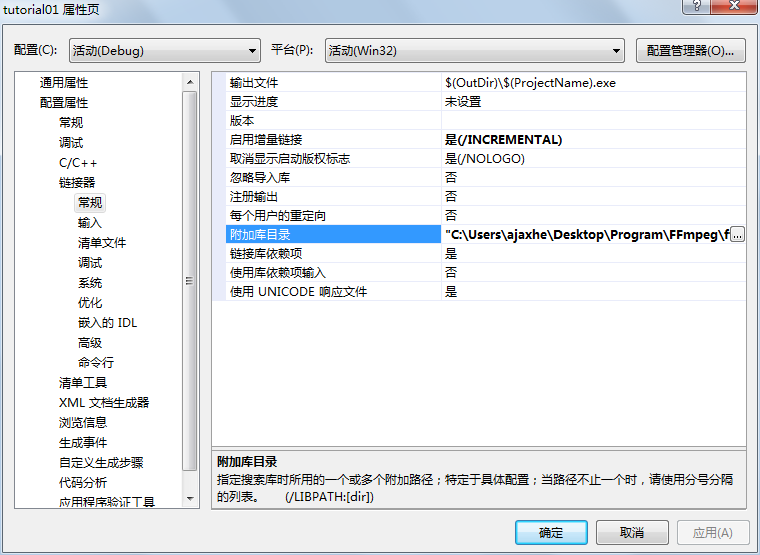

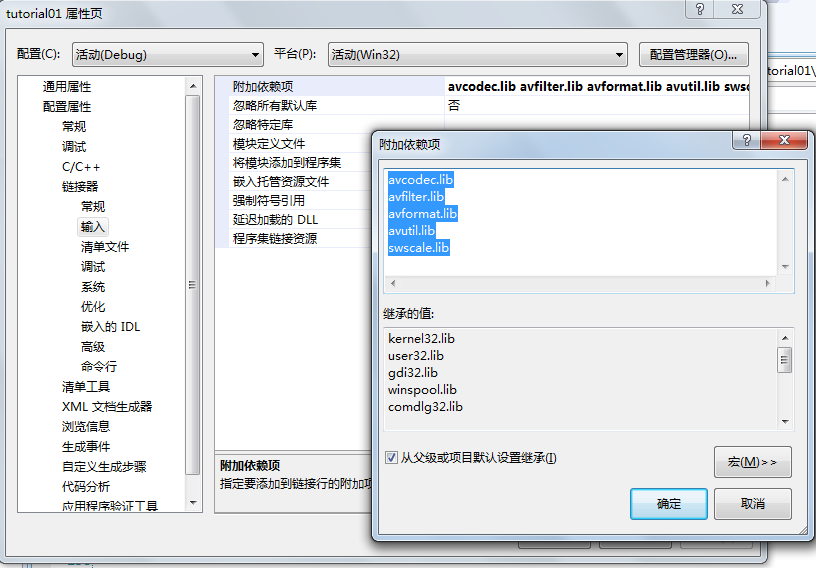

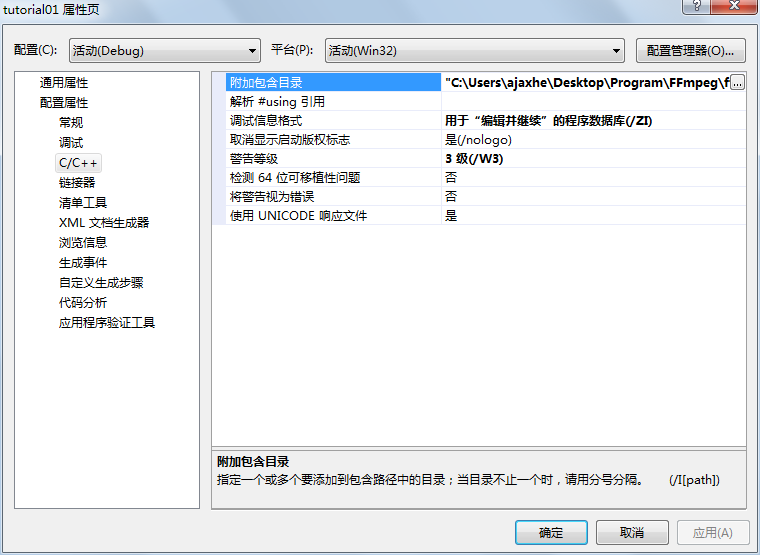

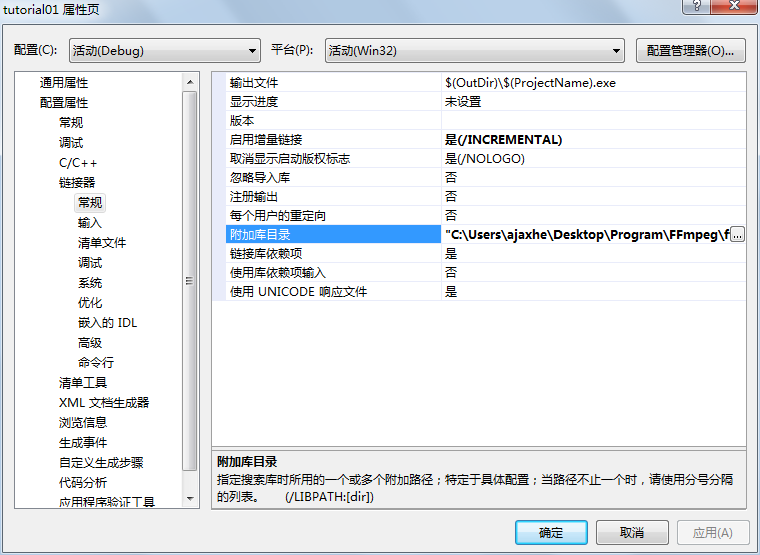

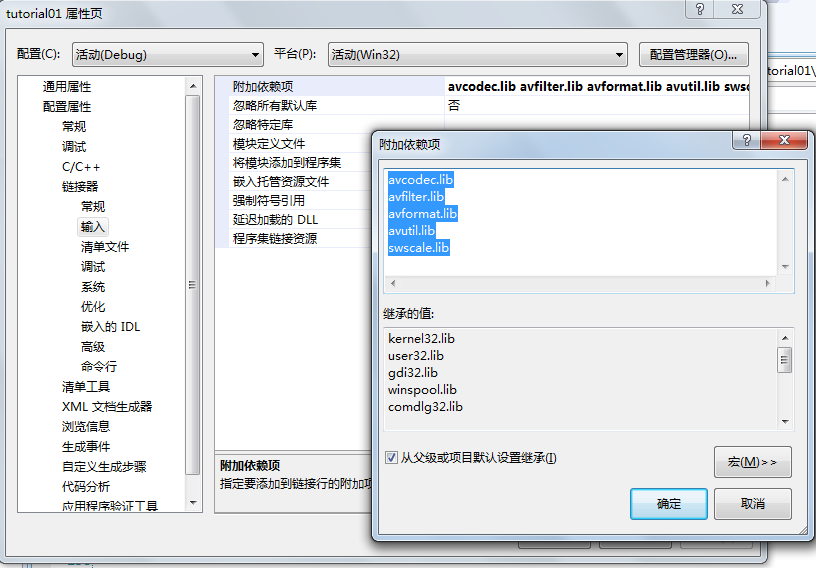

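Before compiling tutorial01, make sure the paths to your header files and lib files are correct. As the screenshots show, you need to change the lib library path to match your own setup.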

Source code:

```cpp
// tutorial01.cpp
// Code based on a tutorial by Martin Bohme (boehme@inb.uni-luebeckREMOVETHIS.de)
// Tested on Gentoo, CVS version 5/01/07 compiled with GCC 4.1.1
// A small sample program that shows how to use libavformat and libavcodec to
// read video from a file.
//
// The original C version was built with
//
// gcc -o tutorial01 tutorial01.c -lavutil -lavformat -lavcodec -lz
//
// (assuming libavformat and libavcodec are correctly installed on your system).
// This C++ version targets VS2008 + FFmpeg 0.10; link it against the
// avformat, avcodec, avutil and swscale libraries.
//
// Run using
//
// tutorial01 myvideofile.mpg
//
// to write the first ten frames from "myvideofile.mpg" to disk as
// frame1.bmp ... frame10.bmp.

// FFmpeg's headers use UINT64_C, which C++ only gets from <stdint.h>
// when __STDC_CONSTANT_MACROS is defined before the first include.
#define __STDC_CONSTANT_MACROS

#include <stdio.h>

extern "C" {
#include <libavcodec/avcodec.h>
#include <libavformat/avformat.h>
#include <libswscale/swscale.h>
}

#include <windows.h>

// Write an RGB24 frame as a 24-bit top-down BMP file ("frameN.bmp").
// BMP stores pixels as B,G,R and pads every row to a multiple of 4 bytes.
bool saveAsBitmap(AVFrame *pFrameRGB, int width, int height, int iFrame)
{
    FILE *pFile = NULL;
    BITMAPFILEHEADER bmpheader;
    BITMAPINFO bmpinfo;
    char fileName[32];
    int bpp = 24;
    int rowSize = (width * bpp / 8 + 3) & ~3;  // bytes per row, including padding
    int padding = rowSize - width * bpp / 8;
    static const uint8_t zeros[3] = {0, 0, 0};

    // Open file
    sprintf(fileName, "frame%d.bmp", iFrame);
    pFile = fopen(fileName, "wb");
    if (!pFile)
        return false;

    bmpheader.bfType = ('M' << 8) | 'B';  // "BM" in little-endian order
    bmpheader.bfReserved1 = 0;
    bmpheader.bfReserved2 = 0;
    bmpheader.bfOffBits = sizeof(BITMAPFILEHEADER) + sizeof(BITMAPINFOHEADER);
    bmpheader.bfSize = bmpheader.bfOffBits + rowSize * height;

    bmpinfo.bmiHeader.biSize = sizeof(BITMAPINFOHEADER);
    bmpinfo.bmiHeader.biWidth = width;
    bmpinfo.bmiHeader.biHeight = -height;  // negative height: rows stored top-down
    bmpinfo.bmiHeader.biPlanes = 1;
    bmpinfo.bmiHeader.biBitCount = bpp;
    bmpinfo.bmiHeader.biCompression = BI_RGB;
    bmpinfo.bmiHeader.biSizeImage = 0;     // may be 0 for BI_RGB bitmaps
    bmpinfo.bmiHeader.biXPelsPerMeter = 100;
    bmpinfo.bmiHeader.biYPelsPerMeter = 100;
    bmpinfo.bmiHeader.biClrUsed = 0;
    bmpinfo.bmiHeader.biClrImportant = 0;

    fwrite(&bmpheader, sizeof(BITMAPFILEHEADER), 1, pFile);
    fwrite(&bmpinfo.bmiHeader, sizeof(BITMAPINFOHEADER), 1, pFile);

    for (int h = 0; h < height; h++)
    {
        // Rows in pFrameRGB are linesize[0] bytes apart
        uint8_t *row = pFrameRGB->data[0] + h * pFrameRGB->linesize[0];
        for (int w = 0; w < width; w++)
        {
            uint8_t bgr[3] = { row[3*w + 2], row[3*w + 1], row[3*w] };  // RGB -> BGR
            fwrite(bgr, 1, 3, pFile);
        }
        fwrite(zeros, 1, padding, pFile);  // pad the row to a multiple of 4 bytes
    }

    fclose(pFile);
    return true;
}

// Original PPM writer (kept for reference; no longer called)
void SaveFrame(AVFrame *pFrame, int width, int height, int iFrame) {
    FILE *pFile;
    char szFilename[32];
    int y;

    // Open file
    sprintf(szFilename, "frame%d.ppm", iFrame);
    pFile = fopen(szFilename, "wb");
    if(pFile == NULL)
        return;

    // Write header
    fprintf(pFile, "P6\n%d %d\n255\n", width, height);

    // Write pixel data
    for(y = 0; y < height; y++)
        fwrite(pFrame->data[0] + y*pFrame->linesize[0], 1, width*3, pFile);

    // Close file
    fclose(pFile);
}

int main(int argc, char *argv[]) {
    AVFormatContext *pFormatCtx = NULL;  // must be NULL for avformat_open_input()
    int i, videoStream;
    AVCodecContext *pCodecCtx;
    struct SwsContext *img_convert_ctx = NULL;
    AVCodec *pCodec;
    AVFrame *pFrame;
    AVFrame *pFrameRGB;
    AVPacket packet;
    int frameFinished;
    int numBytes;
    uint8_t *buffer;

    if(argc < 2) {
        printf("Please provide a movie file\n");
        return -1;
    }

    // Register all formats and codecs
    av_register_all();

    // Open video file (replaces the deprecated av_open_input_file())
    if(avformat_open_input(&pFormatCtx, argv[1], NULL, NULL) != 0)
        return -1; // Couldn't open file

    // Retrieve stream information (replaces the deprecated av_find_stream_info())
    if(avformat_find_stream_info(pFormatCtx, NULL) < 0)
        return -1; // Couldn't find stream information

    // Dump information about file onto standard error (replaces dump_format())
    av_dump_format(pFormatCtx, 0, argv[1], 0);

    // Find the first video stream
    videoStream = -1;
    for(i = 0; i < (int)pFormatCtx->nb_streams; i++)
        if(pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO) {
            videoStream = i;
            break;
        }
    if(videoStream == -1)
        return -1; // Didn't find a video stream

    // Get a pointer to the codec context for the video stream
    pCodecCtx = pFormatCtx->streams[videoStream]->codec;

    // Find the decoder for the video stream
    pCodec = avcodec_find_decoder(pCodecCtx->codec_id);
    if(pCodec == NULL) {
        fprintf(stderr, "Unsupported codec!\n");
        return -1; // Codec not found
    }

    // Open codec (replaces the deprecated avcodec_open())
    if(avcodec_open2(pCodecCtx, pCodec, NULL) < 0)
        return -1; // Could not open codec

    // Allocate the decoded (native format) frame and the RGB frame
    pFrame = avcodec_alloc_frame();
    pFrameRGB = avcodec_alloc_frame();
    if(pFrame == NULL || pFrameRGB == NULL)
        return -1;

    // Determine required buffer size and allocate buffer
    numBytes = avpicture_get_size(PIX_FMT_RGB24, pCodecCtx->width, pCodecCtx->height);
    buffer = (uint8_t *)av_malloc(numBytes * sizeof(uint8_t));

    // Assign appropriate parts of buffer to image planes in pFrameRGB.
    // Note that pFrameRGB is an AVFrame, but AVFrame is a superset of AVPicture
    avpicture_fill((AVPicture *)pFrameRGB, buffer, PIX_FMT_RGB24,
                   pCodecCtx->width, pCodecCtx->height);

    // Read frames and save the first ten frames to disk
    i = 0;
    while(av_read_frame(pFormatCtx, &packet) >= 0) {
        // Is this a packet from the video stream?
        if(packet.stream_index == videoStream) {
            // Decode video frame
            avcodec_decode_video2(pCodecCtx, pFrame, &frameFinished, &packet);

            // Did we get a video frame?
            if(frameFinished) {
                // Convert the image into RGB format with libswscale
                if(img_convert_ctx == NULL) {
                    int w = pCodecCtx->width;
                    int h = pCodecCtx->height;
                    img_convert_ctx = sws_getContext(w, h, pCodecCtx->pix_fmt,
                                                     w, h, PIX_FMT_RGB24, SWS_BICUBIC,
                                                     NULL, NULL, NULL);
                    if(img_convert_ctx == NULL) {
                        fprintf(stderr, "Cannot initialize the conversion context!\n");
                        return -1;
                    }
                }
                sws_scale(img_convert_ctx, pFrame->data, pFrame->linesize, 0,
                          pCodecCtx->height, pFrameRGB->data, pFrameRGB->linesize);

                /*
                // Old API used by the original tutorial (img_convert() has been removed):
                img_convert((AVPicture *)pFrameRGB, PIX_FMT_RGB24,
                            (AVPicture*)pFrame, pCodecCtx->pix_fmt, pCodecCtx->width,
                            pCodecCtx->height);
                */

                // Save the frame to disk
                if(++i <= 10) {
                    //SaveFrame(pFrameRGB, pCodecCtx->width, pCodecCtx->height, i);
                    saveAsBitmap(pFrameRGB, pCodecCtx->width, pCodecCtx->height, i);
                }
            }
        }

        // Free the packet that was allocated by av_read_frame
        av_free_packet(&packet);
    }

    // Free the conversion context (sws_freeContext() accepts NULL)
    sws_freeContext(img_convert_ctx);

    // Free the RGB image
    av_free(buffer);
    av_free(pFrameRGB);

    // Free the YUV frame
    av_free(pFrame);

    // Close the codec
    avcodec_close(pCodecCtx);

    // Close the video file (replaces the deprecated av_close_input_file())
    avformat_close_input(&pFormatCtx);

    return 0;
}
```
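The sws_scale() call hides the actual pixel-format conversion. To show roughly what it computes, here is a sketch that assumes 8-bit YUV420P input with BT.601 limited-range coefficients (a common case for decoded video, but not guaranteed for every file). It uses the widely used fixed-point approximation of those coefficients and picks the nearest chroma sample with no interpolation and no scaling; libswscale's real implementation (SWS_BICUBIC filtering, other color matrices, SIMD paths) differs in detail. yuvToRgb and yuv420pToRgb24 are hypothetical helper names for illustration only:

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Clamp an int to the 0..255 range of an 8-bit channel.
static uint8_t clamp8(int v) {
    return static_cast<uint8_t>(std::min(255, std::max(0, v)));
}

// Convert one BT.601 limited-range YUV sample to RGB with 8-bit fixed-point math.
static void yuvToRgb(uint8_t y, uint8_t u, uint8_t v, uint8_t rgb[3]) {
    const int c = y - 16;   // luma offset
    const int d = u - 128;  // Cb centered on 0
    const int e = v - 128;  // Cr centered on 0
    rgb[0] = clamp8((298 * c + 409 * e + 128) >> 8);            // R
    rgb[1] = clamp8((298 * c - 100 * d - 208 * e + 128) >> 8);  // G
    rgb[2] = clamp8((298 * c + 516 * d + 128) >> 8);            // B
}

// Convert a YUV420P image (three planes with their own line sizes, laid out
// like AVFrame::data / AVFrame::linesize) into tightly packed RGB24.
// Each 2x2 block of luma samples shares one U sample and one V sample.
std::vector<uint8_t> yuv420pToRgb24(const uint8_t* const planes[3], const int linesize[3],
                                    int width, int height) {
    assert(width > 0 && height > 0);
    std::vector<uint8_t> rgb(static_cast<std::size_t>(width) * height * 3);
    for (int y = 0; y < height; ++y) {
        const uint8_t* yRow = planes[0] + y * linesize[0];
        const uint8_t* uRow = planes[1] + (y / 2) * linesize[1];
        const uint8_t* vRow = planes[2] + (y / 2) * linesize[2];
        for (int x = 0; x < width; ++x) {
            std::size_t out = (static_cast<std::size_t>(y) * width + x) * 3;
            yuvToRgb(yRow[x], uRow[x / 2], vRow[x / 2], &rgb[out]);
        }
    }
    return rgb;
}
```

Under these assumptions, Y = 235 with neutral chroma (U = V = 128) maps to white (255, 255, 255), and Y = 16 maps to black. That is why a naive copy of the Y plane alone would give a washed-out grayscale image instead of the frame's true colors.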